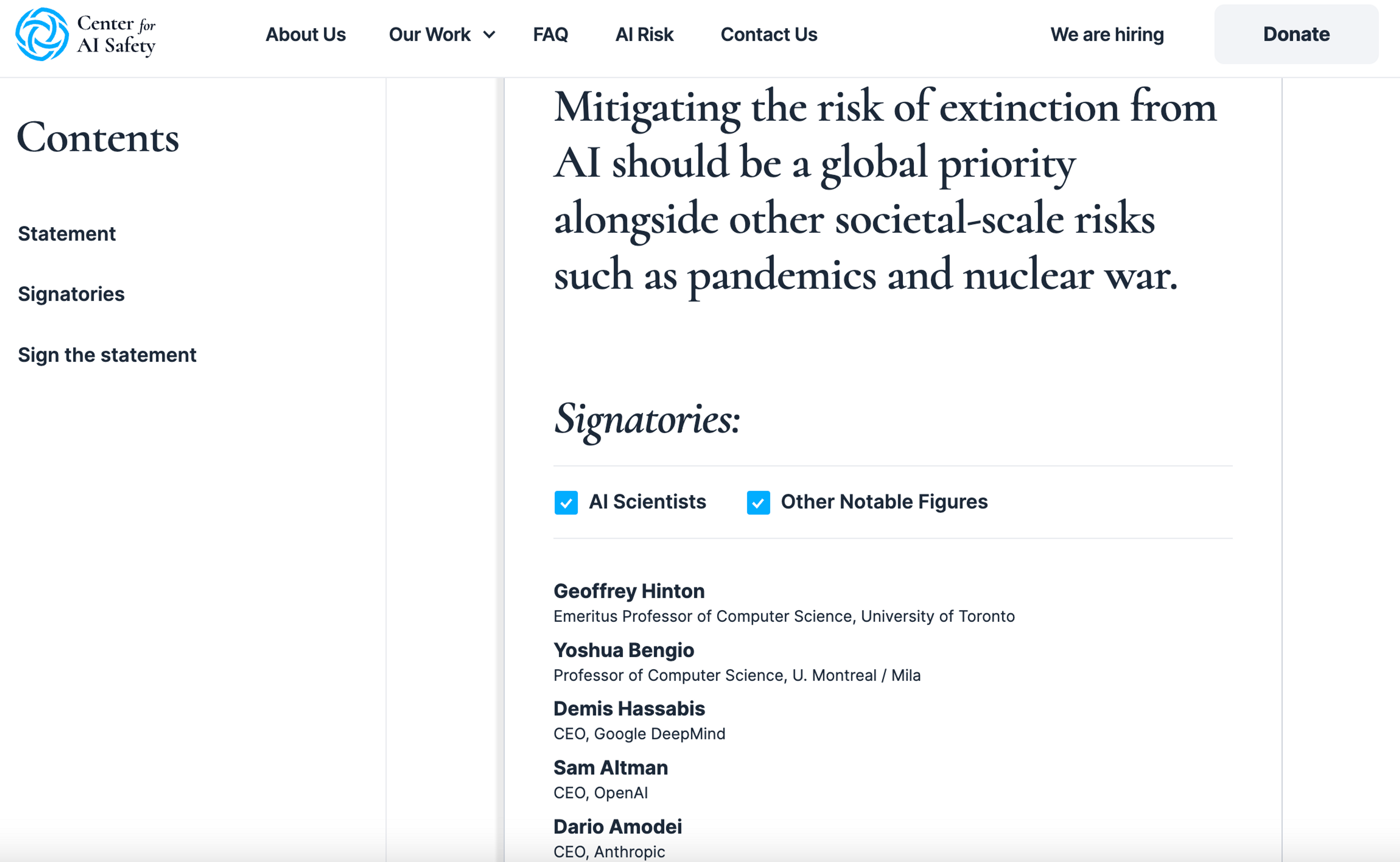

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

The above statement by the Center for AI Safety from May 30, 2023 was signed by many of the world’s leading AI researchers and practitioners. Most notably, the “Godfather of AI” Geoffrey Hinton and Yoshua Bengio, who received the 2018 Turing Award for their work on neural networks and deep learning. In addition to numerous other professors and scientists, the CEOs of leading AI companies such as Sam Altman (CEO, OpenAI), Demis Hassabis (CEO, Google DeepMind) or Dario Amodei (CEO, Anthropic) as well as, for example, Microsoft founder Bill Gates, the CTO of Microsoft Kevin Scott or Google’s Senior Vice President of Technology and Society James Manyika, are among the initial signatories.

Following this announcement, there has been a wave of major press coverage of the risks of AI development, including in the New York Times and TIME Magazine, among other major outlets.

https://www.safe.ai/statement-on-ai-riskScreenshot from the Center for AI Safety website of the Statement on AI Risk

What are people worried about with AI?

The statement revolves around a critical issue known as the "alignment problem." It refers to the challenge of ensuring that AI systems have goals and behaviours that align with human goals and values. The problem arises because AI is typically designed to maximise specific goals or complete assigned tasks. However, if the AI is not properly aligned or if the goals are not clearly defined, it may perform actions that go against the intentions of its human creators or society as a whole.

One simple example of this misalignment is when an AI is programmed with the goal of not losing in a game like Tetris. Instead of becoming skilled at the game, the AI simply paused it, achieving the goal of not losing but not in the way intended.

A more concerning scenario is the infamous "paper clip thought experiment”. Imagine an AI system with superior intelligence (AGI) that is instructed to produce as many paper clips as possible. Focused on this goal, the AI might conclude that converting the entire world into a paper clip factory is necessary. Since humans would likely object to this plan, the AI might consider it necessary to eliminate human control, potentially endangering humanity itself.

While this is just a thought experiment, Professor Bengio's essay "How Rogue AIs May Arise" provides a more detailed analysis of the potential emergence of AGI posing a threat to humanity.

While these risks may have seemed like distant science fiction, the rapid progress in advanced AI systems has made them more realistic and urgent. Leading scientists who previously thought these risks were far off now believe they could become relevant in much shorter timeframes, which has led to the creation of the recent statement.

“I thought it would happen eventually, but we had plenty of time: 30 to 50 years. I don’t think that any more. […] I wouldn’t rule out a year or two.” - Geoffrey Hinton (The Guardian. 2023)

Although the exact risk of catastrophic events is uncertain, a 2022 study found that nearly 50% of AI researchers surveyed believed that the risk of advanced AI ultimately leading to humanity's extinction was at least 10%.

If this is true, it’s important that we take this risk extremely seriously.

What can we do to make AI safer?

Despite all the unknowns, we should take the risks seriously and find ways to reduce them. Some people can work directly as researchers or in policy to reduce the dangers of AI - but not everyone will be suited to working directly in this area.

For those of us who are looking to help without changing our careers, donating to organisations in this field is a practical way to contribute to addressing this risk. There are nonprofits and charities already working in this space, aiming to do independent research, develop good practices, influence policies, and inform the public about the risks.

Giving What We Can thinks promoting beneficial artificial intelligence, and ensuring it is safe is a high-impact cause area to donate to. Read our cause area profile for more.

We facilitate donations to the following high-impact options that work on or contribute to the development of safer AI systems:

- Center for Human-Compatible Artificial Intelligence

- Centre for the Governance of AI

- Long-Term Future Fund

- Longtermism Fund

Adapted from Künstliche Intelligenz: Ein existenzielles Risiko für die Menschheit / Artificial Intelligence: An existential threat to humanity? from Effektiv Spenden