Micronutrient Fortification

Giving What We Can no longer conducts our own research into charities and cause areas. Instead, we're relying on the work of organisations including J-PAL, GiveWell, and the Open Philanthropy Project, which are in a better position to provide more comprehensive research coverage.

These research reports represent our thinking as of late 2016, and much of the information will be relevant for making decisions about how to donate as effectively as possible. However, we are not updating them, and the information may therefore be out of date.

Cause Area: Micronutrient Deficiencies

Differences between micronutrient supplementation and fortification

To begin, note that micronutrient supplementation and micronutrient fortification are different. Micronutrient supplementation is here defined as taking capsules or foodstuffs (such as biscuits) that are made specifically to increase the micronutrient status of the person consuming them, whereas fortification means that commonly eaten staple foods are enriched with micronutrients. Although the aim of this report is to examine the effectiveness of micronutrient fortification, the two approaches are closely related, so results from micronutrient supplementation can sometimes provide insight into the effectiveness of fortification. Supplementing entire populations is often not as viable or as cost-effective as fortification. In addition, a recent study suggests that in pregnant adolescents, prenatal supplements cannot fully compensate for preexisting dietary deficiencies[^fn-1]; thus, even if supplements could be distributed cost-effectively to whole populations, supplementation might still be less effective at improving nutritional outcomes than a continuous diet of food enriched through micronutrient fortification. Finally, biofortification, which increases the micronutrient content of plants directly, is not discussed here.

General considerations of micronutrient fortification

A recent systematic review of 201 studies on the impact of micronutrient fortification of food on maternal and child health1 concludes that fortification is promising, but that widespread malabsorption, caused by high burdens of diarrhoea and intestinal inflammation, may decrease its effectiveness. Further, even though fortification is potentially an effective strategy, evidence from the developing world is scarce, and future programmes should measure the direct impact of fortification on morbidity and mortality.

Epidemiology of malnutrition

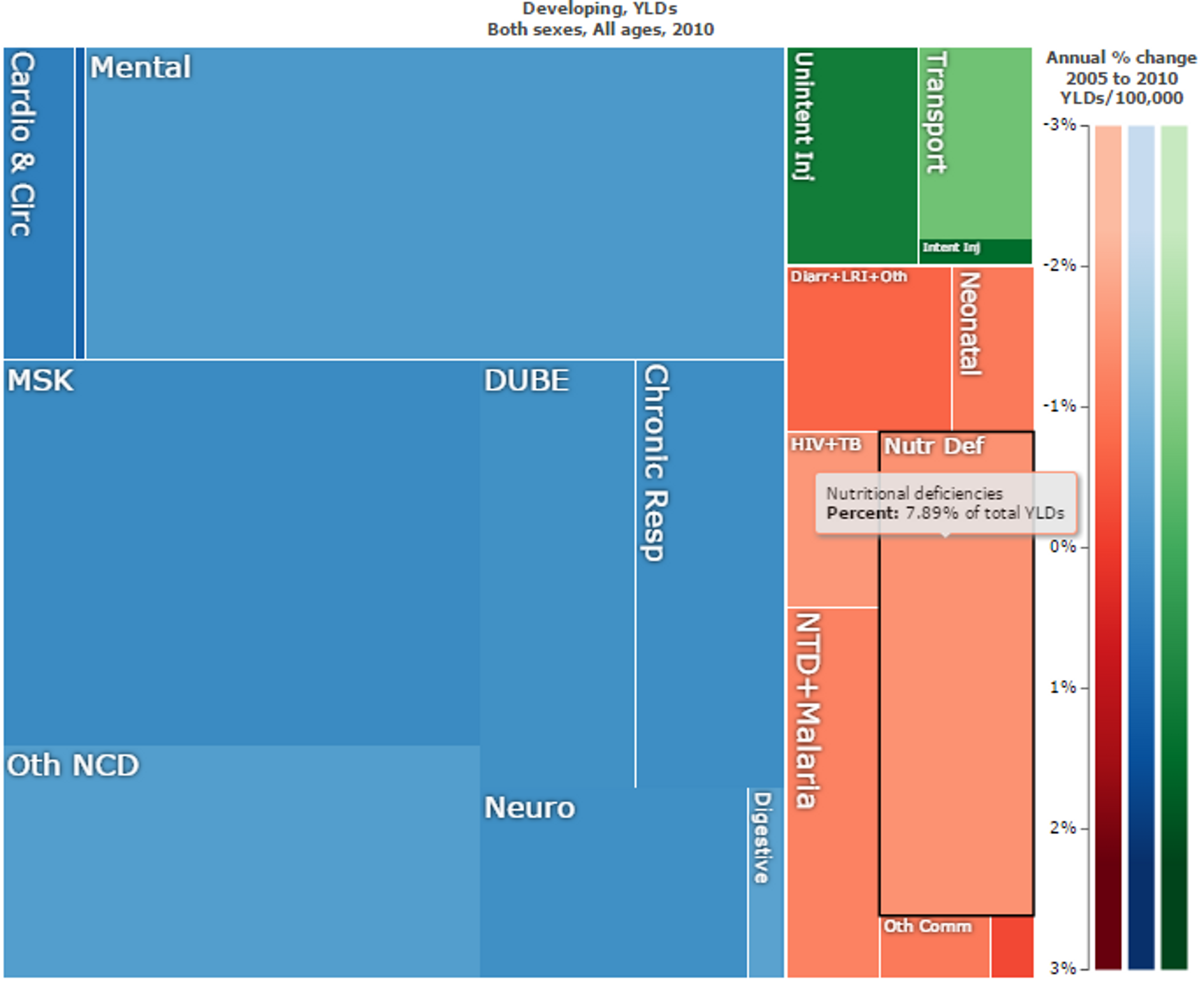

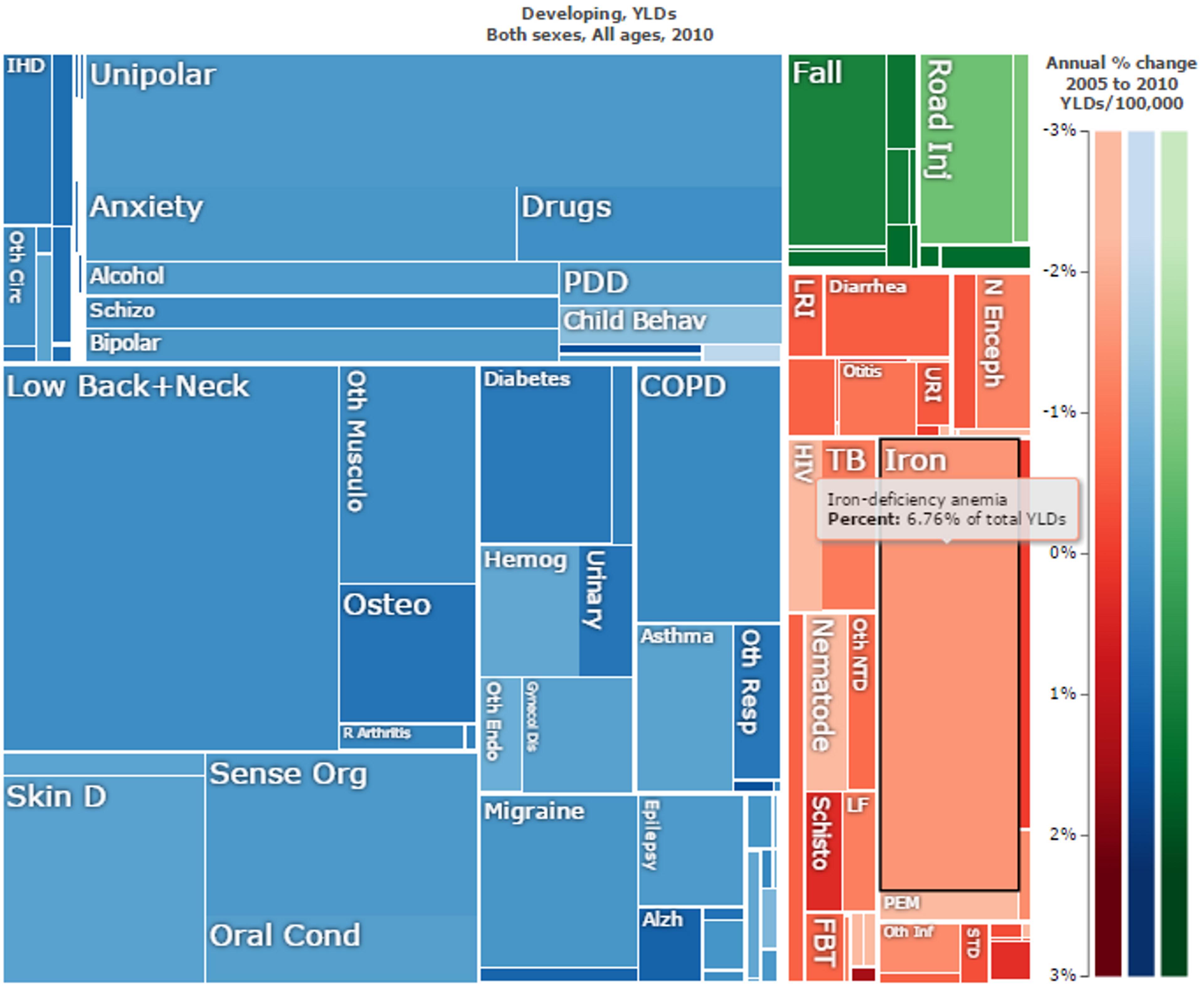

Being underweight is the number-one contributor to the burden of disease in sub-Saharan Africa23, and nutritional deficiencies make up a large part of the direct disease and disability burden in developing countries (see Figure 1). Moreover, because nutritional deficiencies are linked to other diseases (see Figure 2), over 50% of years lived with disability in children can be traced to these deficiencies45.

Figure 1: Overall “Years Lived with Disability (YLD)” in developing countries. Nutritional deficiencies are marked in black and make up 7.89% of the total YLDs. Figure generated with the ‘Global Burden of Disease Compare tool’ (see http://ihmeuw.org/3a5f). © 2013 University of Washington - Institute for Health Metrics and Evaluation (Global burden of Disease data 2010, released 3/2013)

Cost-effectiveness of different micronutrient fortification interventions

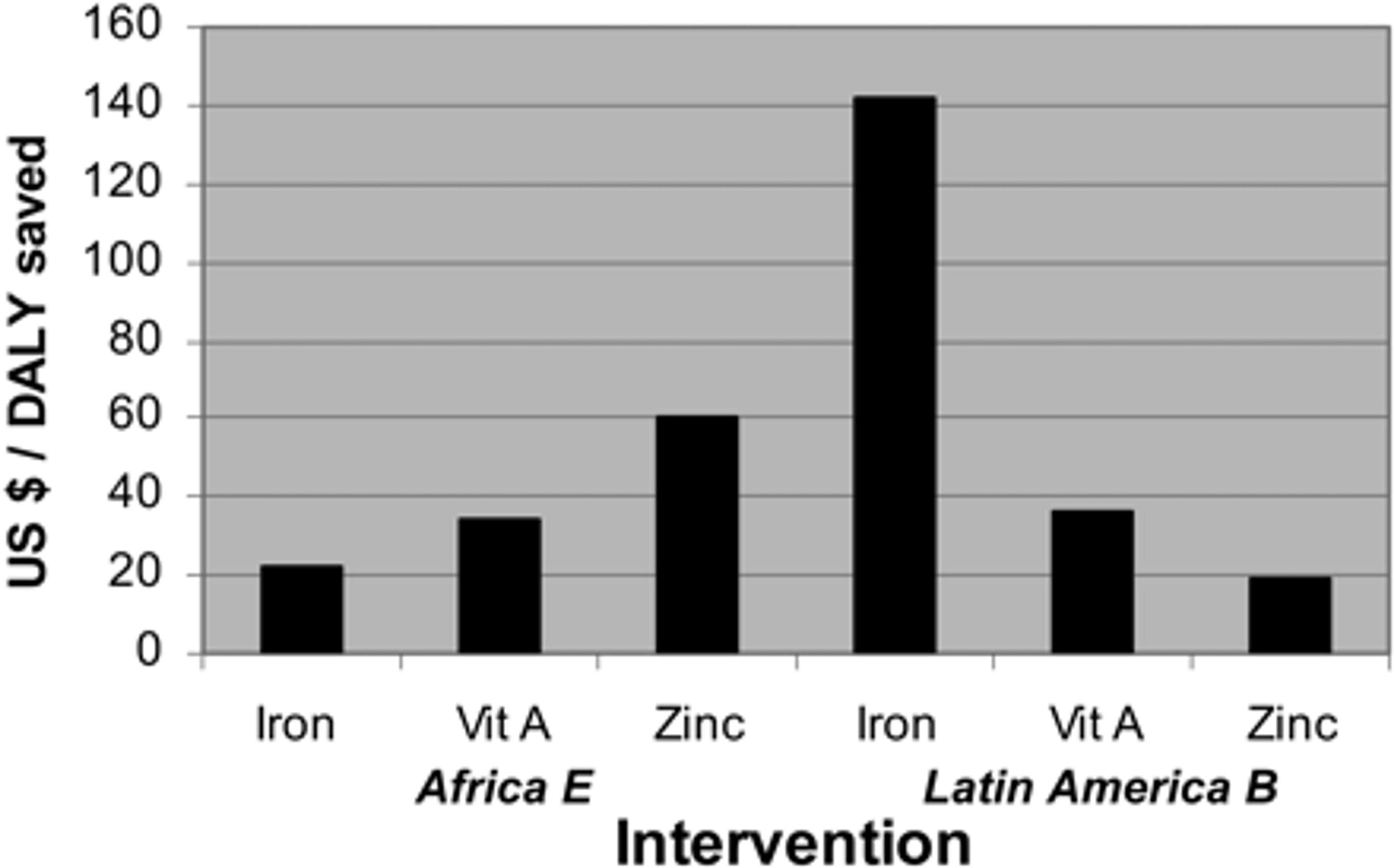

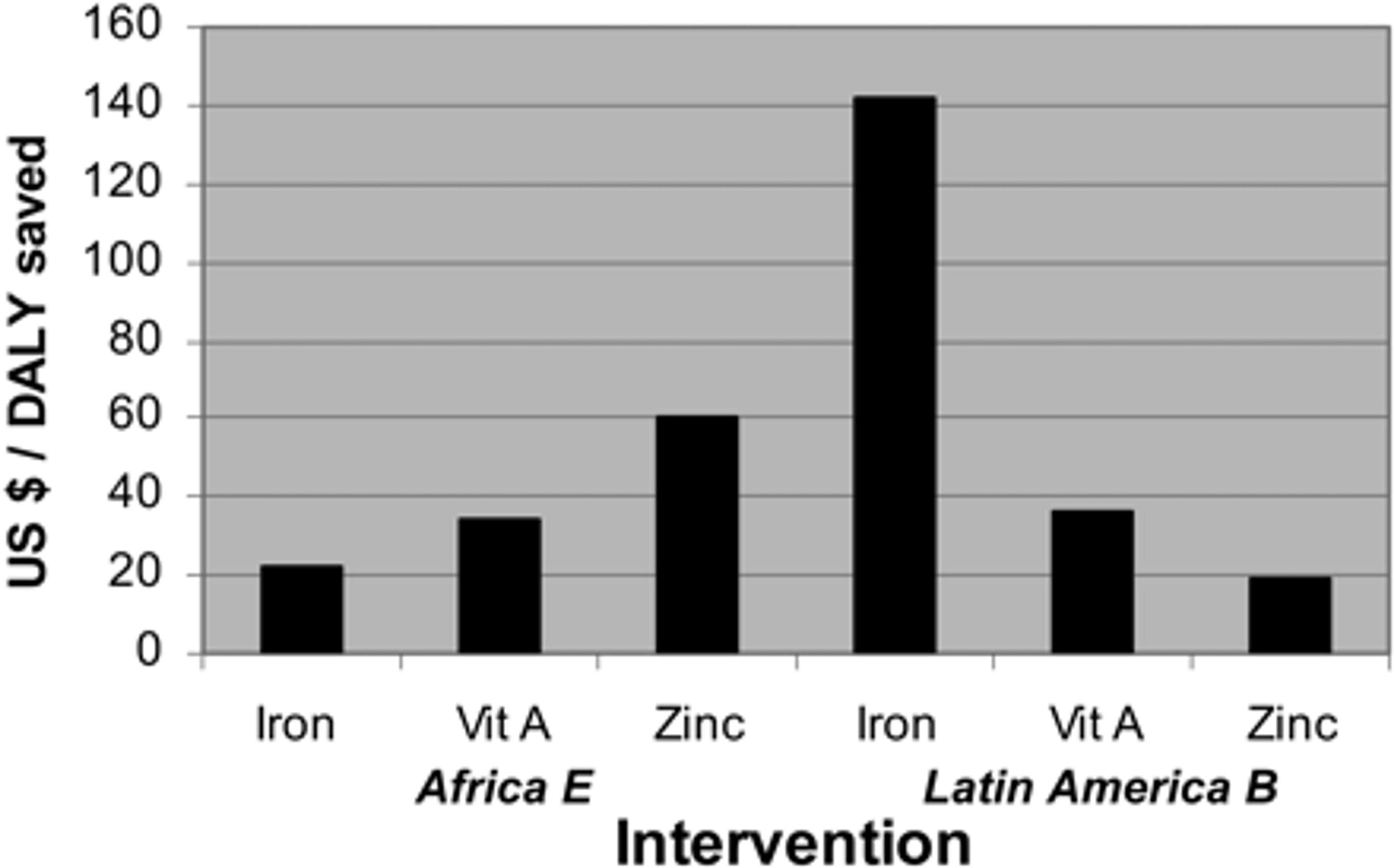

Even though there are some uncertainties in estimating the cost-effectiveness of micronutrient fortification programmes, and cost-effectiveness varies across programmes6, fortification is generally considered to be a very cost-effective intervention. Early estimates suggested that fortification with iron, vitamin A and zinc cost well below $100 per DALY averted (see Figure 2).

Figure 2: Comparison of cost effectiveness of fortification in East Africa. Figure adapted from7

A 2006 report from the Disease Control Priorities Project suggests that overall cost-effectiveness is US$66 to US$70 per DALY averted for iron fortification programs and US$34 to US$36 per DALY averted for iodine fortification programs8.

There is now a vast literature on the cost-effectiveness of micronutrient fortification. A recent review article9 on the economics of nutrition summarizes the evidence on cost-effectiveness for different micronutrient programmes (see Table 2 of this review for a summary of research papers on the topic10). Two of the studies summarized in this review provide cost-per-DALY-averted estimates for fortification programmes:

- Folic acid fortification in Chile: costs per neural tube defect case averted and per infant death averted were I$1,200 and I$11,000 (international dollars), respectively11; cost per DALY averted was I$89; net cost savings of fortification were I$2.3 million

- Vitamin A fortification of edible oils and sugar in Uganda: Cost per DALY averted is US $82 for sugar fortification and $18 for oil fortification
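To make these cost-per-DALY figures more concrete, the sketch below converts several of them into the number of DALYs a fixed budget would avert. It assumes a constant average cost per DALY (real programmes have fixed start-up costs and may face diminishing returns), and the US$1 million budget is an arbitrary illustrative figure. The Chile estimate is in international dollars while the others are in US$, so the comparison is only indicative.

```python
# Illustrative sketch: DALYs averted per fixed budget, assuming a constant
# average cost per DALY averted. Figures are the estimates quoted in the text.
BUDGET_USD = 1_000_000  # arbitrary illustrative budget

COST_PER_DALY = {
    "Iron fortification (DCP 2006, upper bound)": 70,
    "Iodine fortification (DCP 2006, upper bound)": 36,
    "Folic acid fortification, Chile (I$)": 89,
    "Vitamin A in sugar, Uganda": 82,
    "Vitamin A in oil, Uganda": 18,
}


def dalys_averted(budget: float, cost_per_daly: float) -> float:
    """DALYs averted by `budget` if every DALY costs `cost_per_daly`."""
    if cost_per_daly <= 0:
        raise ValueError("cost per DALY must be positive")
    return budget / cost_per_daly


# Print from most to least cost-effective.
for programme, cost in sorted(COST_PER_DALY.items(), key=lambda item: item[1]):
    print(f"{programme:45s} {cost:>4} per DALY -> "
          f"{dalys_averted(BUDGET_USD, cost):>8,.0f} DALYs per 1M")
```

Under this assumption, Ugandan oil fortification would avert roughly 55,600 DALYs per US$1 million, compared with roughly 14,300 for iron fortification at the upper DCP estimate.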

A 2009 review by John Fiedler and Barbara MacDonald12 provides cost-per-DALY-averted estimates for micronutrient fortification programmes in many countries. Although these programmes were not implemented by Project Healthy Children (PHC), many of them were adopted in the same countries where PHC operates. These data therefore give some indication of the cost-effectiveness that similar PHC programmes in these countries could be expected to achieve. Tables 1 and 2 are adapted from Fiedler and MacDonald’s review to show only the countries in which PHC is currently operating. These estimates (see Table 1) suggest that many of these programmes are highly cost-effective, at around $100 per DALY averted, similar to previous high cost-effectiveness estimates for fortification programmes in other countries. Table 2 summarizes key statistics for micronutrient programmes in a number of countries in which PHC operates, along with each programme’s ranking among programmes worldwide in terms of cost-effectiveness. For instance, wheat fortification in Malawi was the 16th most cost-effective food fortification programme in the world. Taken together, these data indicate that PHC operates in countries where micronutrient fortification programmes have proven to be cost-effective. Note, however, that these data are from 2009, and it is possible that many of the most cost-effective programmes (i.e., the ‘low-hanging fruit’) have since been implemented.

Cost per DALY averted (US$)

| Country | Sugar | Vegetable oil | Maize flour (reduced package) | Maize flour (expanded package) | Wheat flour (reduced package) | Wheat flour (expanded package) |
|---|---|---|---|---|---|---|
| Burundi | — | 106 | — | — | — | — |
| Malawi | 223 | 105 | 183 | 120 | 24.61 | 10.81 |
| Rwanda | 225 | 68 | — | — | — | — |
| Sierra Leone | — | 69 | — | — | — | — |
| Zimbabwe | 33 | 487 | 2232 | 1060 | 42.54 | 31.78 |

Table 1: Cost per DALY averted (US$), arrayed alphabetically by the countries in which PHC operates. Adapted from a table of total incremental 10-year cost and cost per DALY averted13. Full table here14

| Rank | Country and food | Global Burden of Disease region | Total cost (US$) | Cost per DALY saved (US$) | Cumulative total cost (US$) |
|---|---|---|---|---|---|
| 16 | Malawi—wheat | AFR_E | 1,344,446 | 25 | 24,074,848 |
| 21 | Zimbabwe—sugar | AFR_E | 2,218,527 | 33 | 139,567,326 |
| 30 | Zimbabwe—wheat | AFR_E | 1,872,396 | 43 | 252,005,760 |
| 46 | Rwanda—oil | AFR_E | 6,623,699 | 68 | 482,470,004 |
| 47 | Sierra Leone—oil | AFR_D | 10,601,950 | 69 | 493,071,954 |
| 52 | Malawi—oil | AFR_E | 9,738,123 | 105 | 733,345,516 |
| 54 | Burundi—oil | AFR_E | 5,500,917 | 106 | 788,260,013 |

Table 2: Rankings of the fortification programmes in countries where PHC is active, taken from a table of micronutrient interventions in 60 countries arrayed by cost per DALY averted. Table adapted from15. Full table here16
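Because cost per DALY averted is total cost divided by DALYs averted, the rows of Table 2 can be used to back out the approximate number of DALYs each programme was expected to avert. Sorting by cost per DALY also reproduces the relative order of the ranks. The sketch below does this for the seven rows shown. The results are approximate, since the published cost-per-DALY figures are rounded. The cumulative-cost column cannot be reproduced from these rows alone, because it also includes programmes in countries where PHC does not operate.

```python
# Rows of Table 2: (rank, programme, total cost in US$, cost per DALY in US$).
TABLE_2 = [
    (16, "Malawi (wheat)", 1_344_446, 25),
    (21, "Zimbabwe (sugar)", 2_218_527, 33),
    (30, "Zimbabwe (wheat)", 1_872_396, 43),
    (46, "Rwanda (oil)", 6_623_699, 68),
    (47, "Sierra Leone (oil)", 10_601_950, 69),
    (52, "Malawi (oil)", 9_738_123, 105),
    (54, "Burundi (oil)", 5_500_917, 106),
]


def implied_dalys(total_cost: float, cost_per_daly: float) -> float:
    """Approximate DALYs averted, using cost per DALY = total cost / DALYs."""
    return total_cost / cost_per_daly


# Sorting by cost per DALY (cheapest first) should match the rank order.
by_cost_per_daly = sorted(TABLE_2, key=lambda row: row[3])

for rank, programme, total, cost_per_daly in by_cost_per_daly:
    print(f"{rank:>3} {programme:20s} ~{implied_dalys(total, cost_per_daly):>9,.0f} DALYs averted")
```

For example, Malawi's wheat programme implies roughly 54,000 DALYs averted, while Sierra Leone's oil programme, which is less cost-effective but larger, implies roughly 154,000.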

To sum up, even though these cost-effectiveness estimates are subject to limitations (i.e. up-to-date cost-effectiveness estimates of the exact fortification programmes that PHC conducts are unavailable, and cost-effectiveness estimates are sometimes uncertain and difficult to compare), we believe that, overall, the available evidence suggests PHC’s cost-effectiveness is roughly similar to the reported estimates — in other words, that PHC’s programmes are highly cost-effective.

Economic effects

Nutritional deficiencies have been shown to have negative economic consequences. Our summary of these effects draws from several recent studies, including the Global Nutrition Report 201417. Note that micronutrient deficiencies only account for part of the economic losses from nutritional deficiencies—macronutrient deficiencies (i.e. hunger) also contribute to these losses.

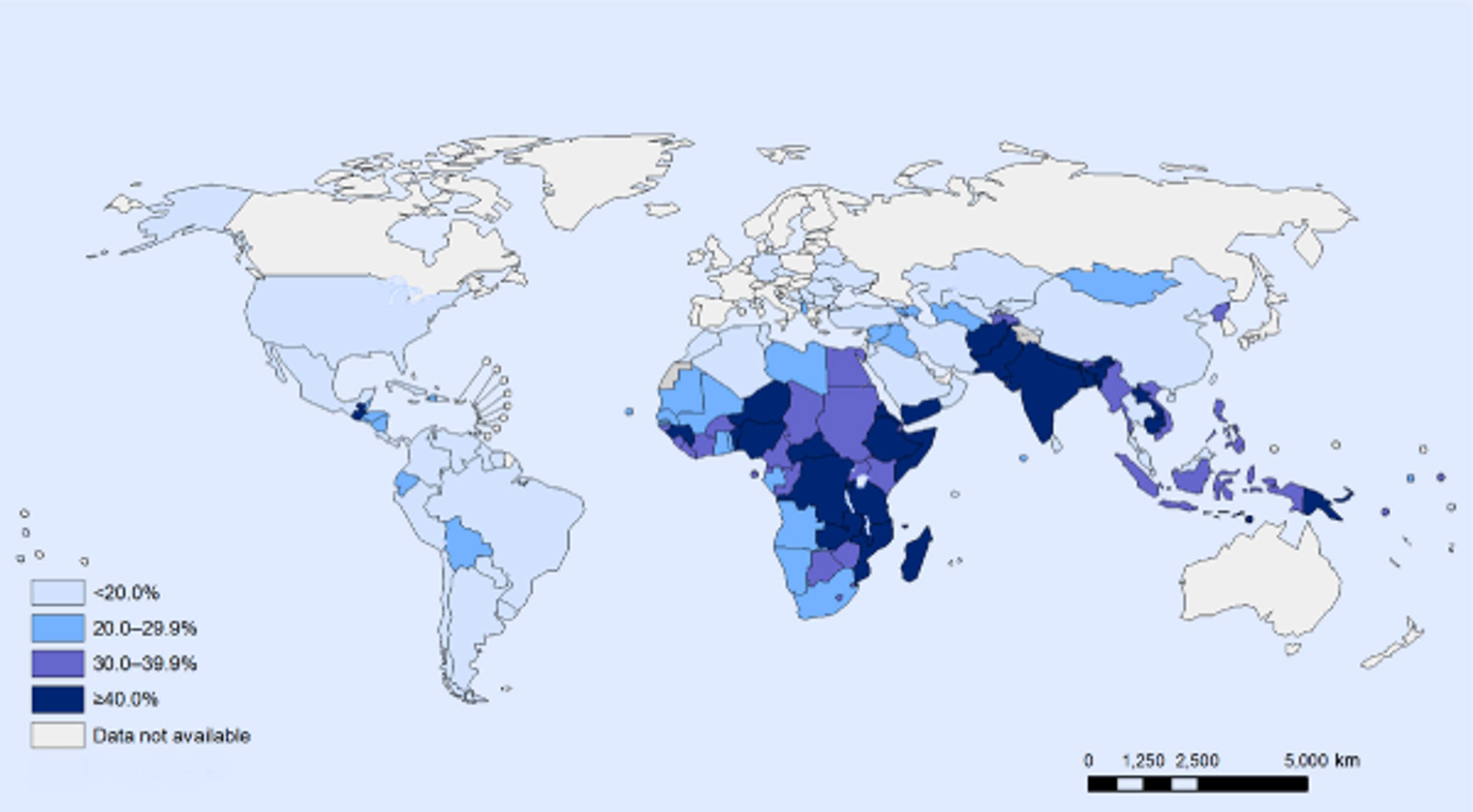

Labour productivity lost

A study from Guatemala suggests that preventing undernutrition in childhood increases productivity in several ways. Specifically, preventing undernutrition increases hourly earnings by 20% and wage rates by 48%. Moreover, children treated for undernutrition are 33% more likely to escape poverty, and treated girls are 10% more likely to own a business as adults1819. Stunted growth, or stunting, is a reduced rate of growth caused by nutritional deficiencies; it is highly prevalent in developing countries (see Figure 3) and is a significant contributor to lost productivity. Analyses suggest that growth failure in early life has profound adverse consequences over the life course for human, social, and economic capital20. One study in particular showed that one extra centimeter of adult height corresponds to a 4.5% increase in wage rates2122. Low birth weight is also associated with increased risk of hypertension and kidney disease later in life; however, micronutrient supplementation during pregnancy reduces the risk of low birth weight and prematurity23.

Figure 3: Stunting among children under 5: latest national prevalence estimates. Figure taken from24

Macroeconomic Impact

Economic analyses suggest that undernutrition lowers a country's overall economic productivity. Specifically, undernutrition has been suggested to lower gross domestic product (GDP) by 1.9% in Egypt, 16.5% in Ethiopia, 3.1% in Swaziland and 5.6% in Uganda25. Asia and Africa lose 11% of gross national product (GNP) every year owing to poor nutrition26. One recent study suggests that, in Cambodia, malnutrition costs more than US$400 million annually, corresponding to about 2.5% of the country’s GDP. In Pakistan, protein malnutrition, iodine deficiency and iron deficiency collectively account for an annual loss of about 3–4% of GDP2728. A study of 10 developing countries suggests that iron-deficiency anaemia causes an average loss of 4.5% of GDP2930.

A recent World Bank study estimated that investing in nutrition can increase a country’s GDP by at least 3 percent annually31. The same study32 concluded that the global benefit-to-cost ratio is 37 to 1 for micronutrient powders for children, 6 to 1 for deworming, 8 to 1 for iron fortification of staples and 30 to 1 for salt iodization.

We have calculated the average benefit-to-cost ratio of fortification programmes in the countries where PHC is active. Using the World Bank’s estimates of the annual GDP loss due to vitamin and mineral deficiencies alone33, we have calculated34 that the average benefit-to-cost ratio in these countries is about 23:1. In other words, the economic benefits of scaling up fortification programmes are about 23 times their cost. Other researchers have found similarly high benefit-to-cost ratios: a recent paper35 looked at the value of stunting-reducing nutrition investments, such as micronutrient fortification, in 17 high-burden countries, and found benefit-to-cost ratios ranging from 3.6 (Democratic Republic of Congo) to 48 (Indonesia), with a median of 18 (Bangladesh). Another recent paper3637 finds similarly impressive benefit-to-cost ratios for iodizing salt (80), iron supplements for mothers and small children (24), vitamin A supplementation (13) and zinc supplementation for children (3). These estimates in the literature are thus broadly comparable to our calculations.
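A benefit-to-cost ratio is the estimated economic benefit of a programme divided by its cost, so a ratio of 23:1 means each dollar spent returns about 23 dollars in economic benefits. The sketch below shows the calculation for three hypothetical countries; the names and figures are invented for illustration and are not the inputs behind our 23:1 estimate. It also shows that the unweighted mean of per-country ratios can differ from the pooled ratio (total benefits divided by total costs), which matters when comparing averages reported by different sources.

```python
# Hypothetical inputs: annual economic benefit and annual programme cost (US$).
# These numbers are invented for illustration only.
COUNTRIES = {
    "Country A": (46_000_000, 2_000_000),
    "Country B": (30_000_000, 1_500_000),
    "Country C": (78_000_000, 3_000_000),
}


def benefit_cost_ratio(benefit: float, cost: float) -> float:
    """Economic benefit per dollar of programme cost."""
    return benefit / cost


ratios = {name: benefit_cost_ratio(b, c) for name, (b, c) in COUNTRIES.items()}

# Unweighted mean of the per-country ratios.
mean_ratio = sum(ratios.values()) / len(ratios)

# Pooled ratio: total benefits over total costs (weights larger programmes more).
pooled_ratio = benefit_cost_ratio(
    sum(b for b, _ in COUNTRIES.values()),
    sum(c for _, c in COUNTRIES.values()),
)

for name, r in ratios.items():
    print(f"{name}: {r:.1f}:1")
print(f"mean of ratios: {mean_ratio:.1f}:1, pooled ratio: {pooled_ratio:.1f}:1")
```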

Effects of micronutrients for mental development

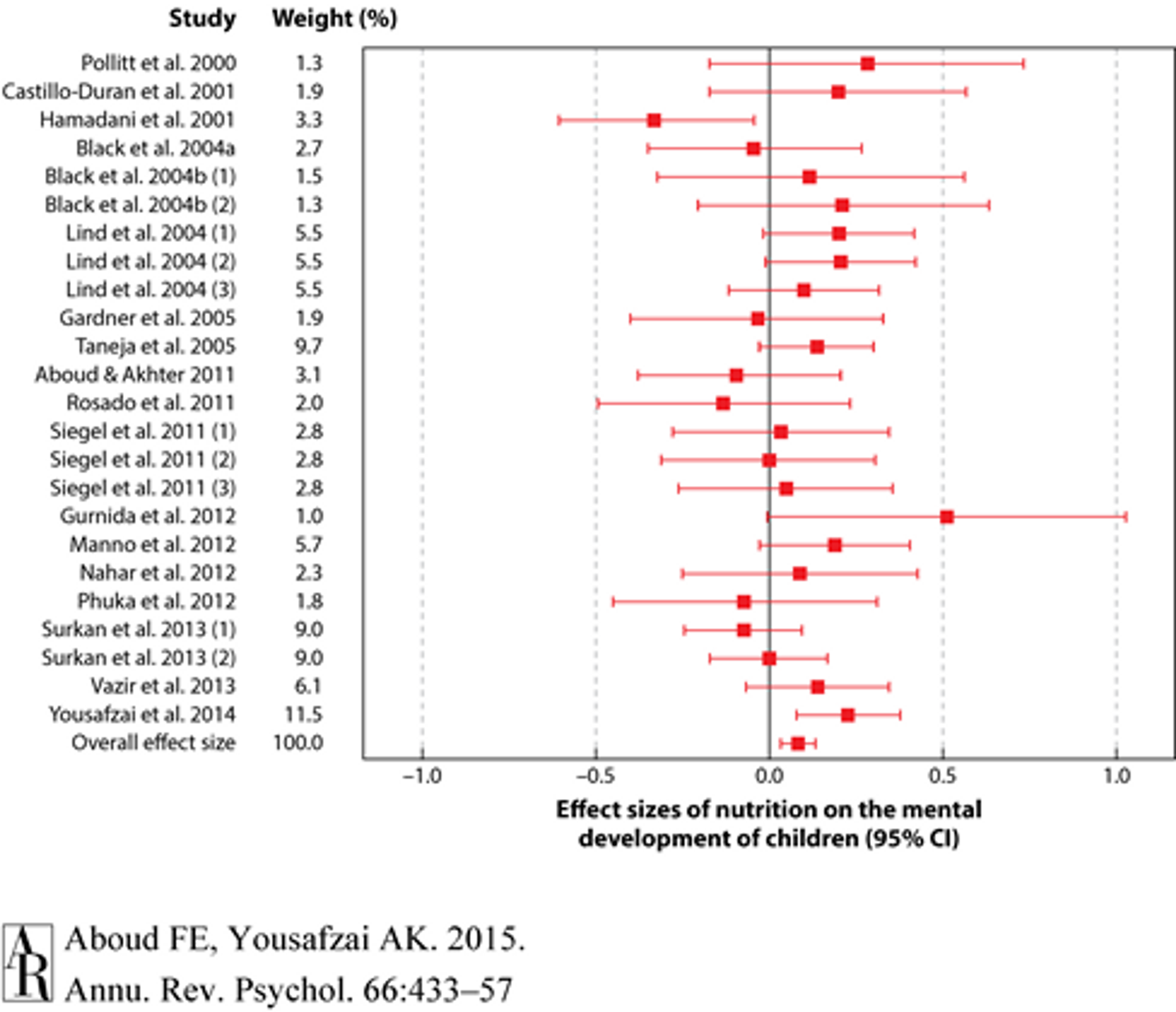

A review and meta-analysis of 21 interventions3839 examined the effects of multiple micronutrients on mental development and yielded a very small but statistically significant overall effect size of d = 0.09 (see Figure 4). Even so, small effect sizes can translate into very high cost-effectiveness, as long as the effects are robust and the interventions are very cheap to implement. Estimating the exact cost-effectiveness, however, is very difficult.

Figure 4: Forest plot of effect sizes (standardized mean difference shown as a red square, 95% confidence interval as red lines) of nutrition on the mental development of children. The overall effect size was 0.086 (95% CI 0.034, 0.137)40.
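The confidence interval reported for Figure 4 is enough to recover the approximate test statistic behind "very small but significant". The sketch below assumes the 95% CI was computed as the estimate plus or minus 1.96 standard errors under a normal approximation, which the source does not state. It also includes a purely hypothetical conversion to points on a scale with a standard deviation of 15 (as used for IQ scores), since the underlying trials used a variety of mental-development measures.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def z_and_p_from_ci(estimate: float, lower: float, upper: float, z_crit: float = 1.96):
    """Approximate standard error, z statistic and two-sided p-value from a 95% CI,
    assuming the interval is estimate +/- z_crit * SE (normal approximation)."""
    se = (upper - lower) / (2 * z_crit)
    z = estimate / se
    p = 2 * (1 - normal_cdf(abs(z)))
    return se, z, p


# Overall effect from Figure 4: d = 0.086, 95% CI 0.034 to 0.137.
se, z, p = z_and_p_from_ci(0.086, 0.034, 0.137)
print(f"SE = {se:.4f}, z = {z:.2f}, two-sided p = {p:.4f}")

# Hypothetical interpretation: on a scale with SD 15, d = 0.086 is about 1.3 points.
points_on_sd15_scale = 0.086 * 15
print(f"about {points_on_sd15_scale:.1f} points on a scale with SD 15")
```

Under these assumptions z is roughly 3.3 and p is roughly 0.001, consistent with a small but statistically robust pooled effect.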

Moreover, another recent study showed that, across several countries, improving linear growth in children under two years of age by 1 standard deviation adds about half a grade-level to school attainment41.

Micronutrient supplementation for children with HIV infection

A recent Cochrane systematic review summarized the available evidence on micronutrient supplementation for children with HIV infection42. The authors conclude that both vitamin A and zinc supplementation are safe and beneficial for children with HIV infection; in particular, zinc appears to reduce deaths from diarrhoea in children with HIV about as much as it does in children without HIV infection. Finally, the review suggests that multiple micronutrient supplements have some clinical benefit in poorly nourished, HIV-infected children.

Recent research on fortification with specific micronutrients

Iodine fortification

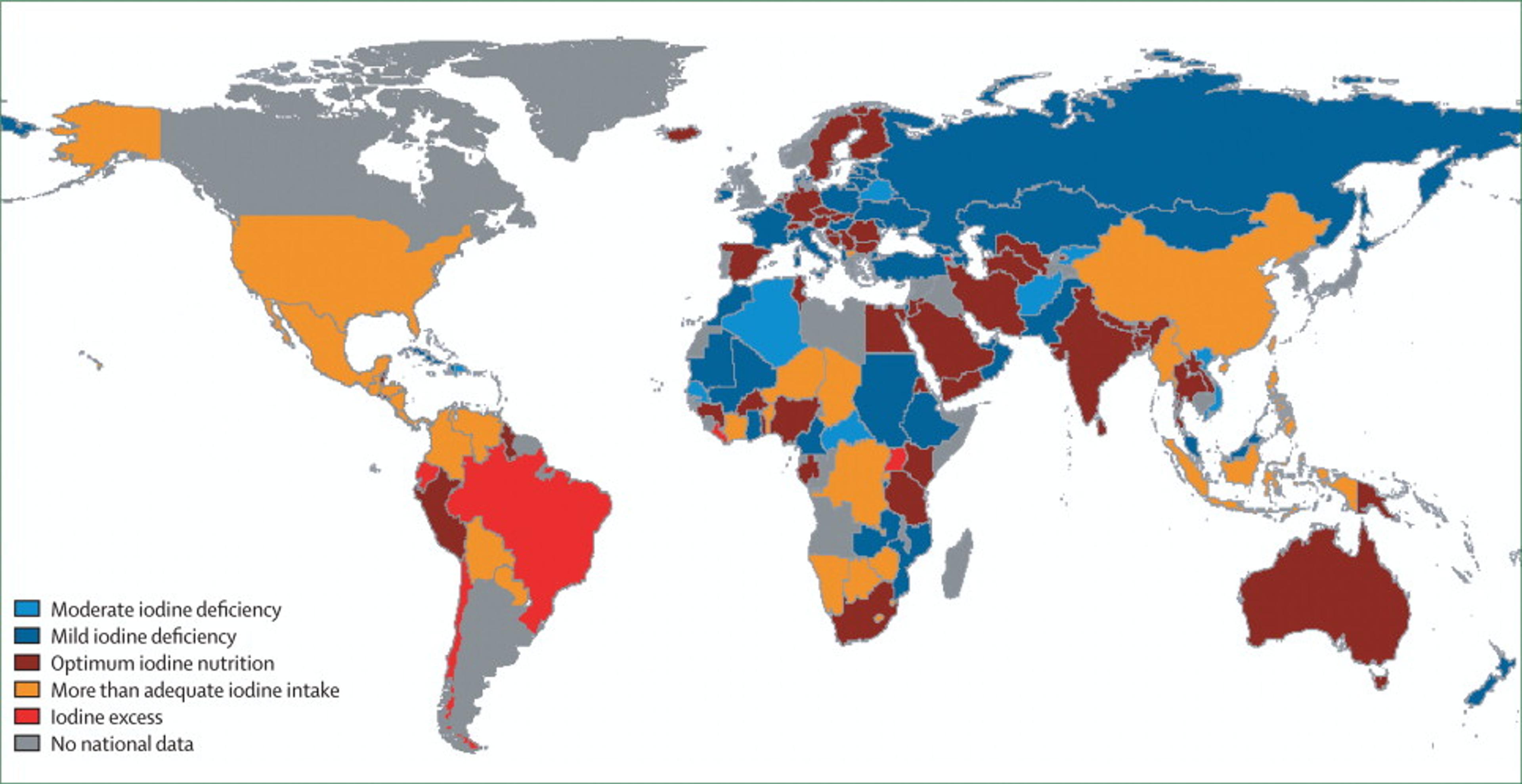

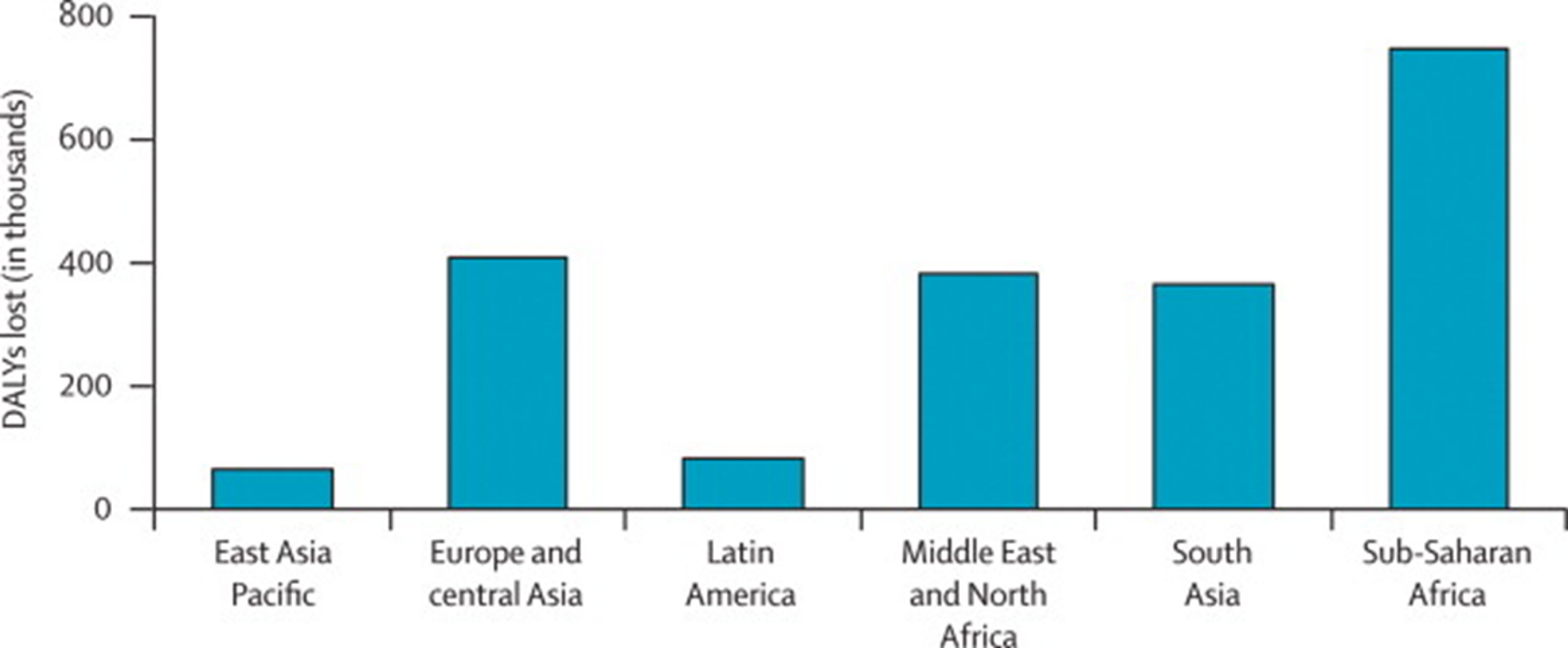

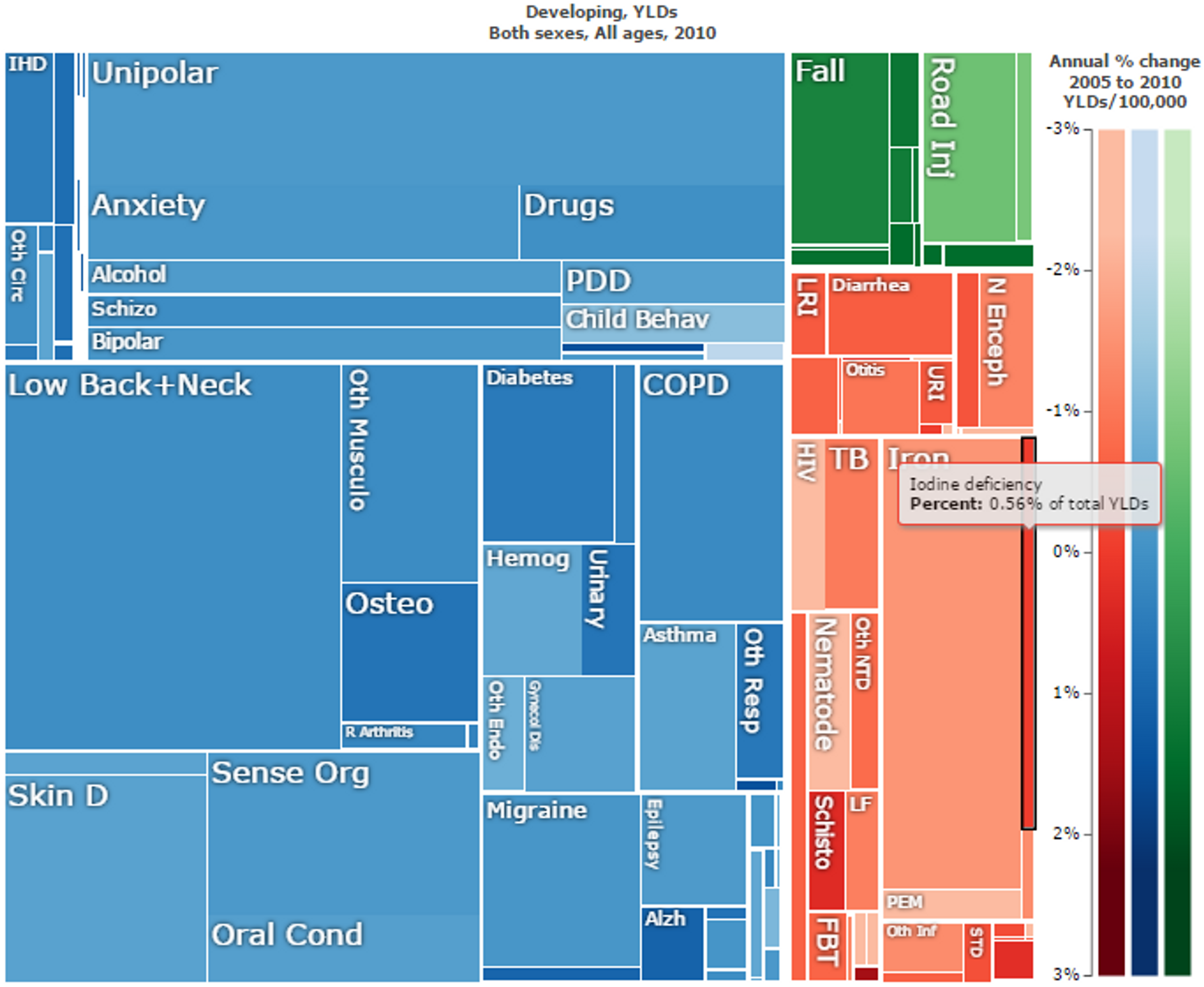

Iodine deficiency disorders are prevalent in many African countries (see Figure 5), where they make up a substantial part of the overall disease burden (see Figures 6 and 7).

Figure 5: Iodine nutrition based on the median urinary iodine concentration, by country43

Figure 6: Disability-adjusted life years (DALYs) (thousands) lost due to iodine deficiency in children younger than 5 years of age, by region44

Figure 7: Overall “Years Lived with Disability (YLD)” in developing countries. Iodine deficiency is marked in black and makes up 0.56% of the total YLDs. Figure generated with the ‘Global Burden of Disease Compare tool’ (see http://ihmeuw.org/3a5v). © 2013 University of Washington - Institute for Health Metrics and Evaluation (Global burden of Disease data 2010, released 3/2013)

Benefits and Risks of Iodine

One risk of iodine supplementation is the possibility of iodine excess. Iodine excess has been suggested to cause hyper- and hypothyroidism, goitre and thyroid autoimmunity, effects which can occur even near the upper recommended daily intake of iodine45. In some instances, thyroid autoimmunity and hypothyroidism have been observed after the introduction of salt iodization programmes46.

However, most researchers agree474849 that the available evidence suggests that the benefits of correcting iodine deficiency (chiefly a reduction in goitre and hypothyroidism) far outweigh the risks of iodine supplementation, although dosing must be handled with care, since both too little and too much iodine can harm the thyroid.

One review concludes that most individuals suffer no disturbance from iodine excess, iodine-induced disturbances are mostly transient and easily managed, and that iodine-induced hyperthyroidism, in particular, disappears from the population within a few years of properly dosed iodine supplementation50.

Similarly, a recent systematic review and meta-analysis of the effects and safety of salt iodization51 also concludes that the benefits outweigh the risks. The review finds that in certain contexts, population-level salt iodization may cause a transient increase in the incidence of hyperthyroidism (though not hypothyroidism). On the benefits side, however, the evidence suggests that salt iodization reduces the incidence of goitre (moderate-quality evidence), cretinism (moderate) and low cognitive function (low), and increases urinary iodine concentration (moderate). Based on this evidence, a recent WHO report52 strongly recommends that all salt be fortified with iodine as a safe and effective strategy to prevent and control iodine deficiency disorders in all populations.

Effect of Iodine on (mental) development in children

Iodine is crucial for the normal physiological and cognitive growth and development of children53. A recent meta-analysis of randomized controlled trials found that iodine-fortified foods are associated with increased urinary iodine concentration among children54. Another recent systematic review and meta-analysis looked at the effects of iodine supplementation on the mental development of children under 5 years of age55. The authors concluded that evidence from recent studies suggests that iodine-deficient children lose 6.9–10.2 IQ points compared with children who are not iodine-deficient. However, the authors caution that some study designs were weak, and they call for more research on the relation between iodized salt and mental development. A recent cluster randomized trial investigated the effectiveness of iodized salt programmes in improving mental development and physical growth in children under 3. The trial found that the treatment group had higher scores on three out of four intelligence and motor tests. Although these results appear to support the benefits of salt iodization programmes, it is worth noting that the study was funded by the Micronutrient Initiative, a non-profit agency that works to eliminate vitamin and mineral deficiencies in developing countries, which may have biased the results56. Finally, a recent study of a natural experiment showed that in iodine-deficient regions of the United States in the 1920s, iodization raised IQ scores by 15 points and raised the average IQ in the United States by 3.5 points57.

A recent double-blind, randomised, placebo-controlled trial compared direct iodine supplementation of infants with supplementation of their breastfeeding mothers58. It found direct supplementation of infants to be less effective at improving infant iodine status than supplementing the mothers, which suggests that breastfeeding mothers pass on improved iodine status to their children. Does this effect extend from direct supplementation to salt iodization programmes? A systematic review examined the iodine status of lactating mothers in countries with iodine fortification programmes (the review did not look at the iodine status of the mothers’ infants). The review concluded that although salt iodization is still the most feasible and cost-effective approach to controlling iodine deficiency in pregnant and lactating mothers, iodine status in lactating mothers is still within the iodine-deficiency range in most countries with voluntary programmes, and even in areas with mandatory iodine fortification, so lactating mothers need daily prenatal vitamin/mineral supplements containing iodine59. We assume that, in the absence of daily prenatal iodine supplements, iodine fortification, which is also a less costly intervention, will at least contribute to improving the iodine status of mothers and their children. Similarly, a recent study from Turkey concluded that even 8 years after the introduction of mandatory iodization, iodine intake in pregnant women was still inadequate60.

Iodine loss due to storage and cooking

The iodine content of iodised table salt can decline over the course of long-term storage61. GiveWell has voiced concern that there might be substantial loss of iodine in salt, which could potentially render iodization ineffective62. Below, we review the evidence on the relative decrease in iodine content and on whether a substantial amount of iodine remains; if it does, fortification programmes can adjust the absolute iodine content to compensate for losses during storage.

One paper investigated this issue and concluded that iodine fortification is at least somewhat robust to storage: after 3.5 years of storage in sealed paper bags at room temperature and 30%–45% humidity, the salt had lost only 58.5% of its iodine content63. A more recent study looked at losses under higher humidity with unlimited airflow, which is perhaps a more realistic setting for a rural household64. The authors found that after 5 months of table salt storage in open jars at high humidity (90%), iodine losses rose to 70%. However, some storage procedures may mitigate these effects.
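The two storage studies used different durations and conditions, so their headline losses are not directly comparable. One way to put them on a common footing is to assume first-order (exponential) decay, C(t) = C0·exp(−kt), and back out a monthly rate constant k from each. This decay model is our assumption, not something either study reports, and real losses may not follow it. On this assumption, the sketch shows that open-jar storage at 90% humidity loses iodine more than ten times faster than storage in sealed bags.

```python
import math


def first_order_rate(fraction_lost: float, months: float) -> float:
    """Rate constant k (per month) for C(t) = C0 * exp(-k * t), given the
    fraction of iodine lost after `months` of storage."""
    if not 0 <= fraction_lost < 1:
        raise ValueError("fraction_lost must be in [0, 1)")
    return -math.log(1.0 - fraction_lost) / months


def half_life(k: float) -> float:
    """Months until half of the iodine is lost, under first-order decay."""
    return math.log(2) / k


# Sealed paper bags, room temperature, 30-45% humidity: 58.5% lost after 3.5 years.
k_sealed = first_order_rate(0.585, 3.5 * 12)
# Open jars, 90% humidity: 70% lost after 5 months.
k_open = first_order_rate(0.70, 5)

print(f"sealed bags: k = {k_sealed:.3f}/month, half-life = {half_life(k_sealed):.0f} months")
print(f"open jars:   k = {k_open:.3f}/month, half-life = {half_life(k_open):.1f} months")
print(f"ratio of rates: {k_open / k_sealed:.1f}")
```

Under this assumption, iodine in sealed bags has a half-life of roughly 33 months, compared with roughly 3 months in open jars at high humidity.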

A study in Ethiopia investigated how this loss of iodine propagates through the supply chain from manufacturer to consumer. The study found that the concentration of iodine in the sampled salts decreased by 57% from the production site to the consumers. They concluded that due to iodine loss, 63% of adults, and 90% of pregnant women, were at risk of insufficient iodine intake.65

Food preparation can also cause iodine loss: one study found that, in the lab, after cooking table salt for 24 hours at 200°C, iodine loss was only 58.46%66. Another study looked at iodine content in different soups during cooking for 70 minutes at 100°C and found that the bioavailable iodine remaining still met daily requirements67. Thus, as real-world household cooking conditions are likely to be much less extreme, iodine loss during cooking should not be a major concern.

The WHO advises that iodine losses under local conditions of production, climate, packaging and storage should be taken into account, and that an additional amount of iodine should be added at factory level to compensate68. PHC has told us that they take local storage and cooking conditions into account when determining fortification levels for iodine69, as well as for other nutrients, particularly vitamin A. Specifically, PHC assesses current local consumption and storage conditions either by conducting their own assessment, by relying on data from the World Food Programme, or by adapting ECSA (East, Central, and Southern Africa) fortification standards, which are specifically tailored to regional consumption, cooking, and storage patterns.
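Adding iodine at factory level to offset losses amounts to scaling the factory concentration by the inverse of the fraction expected to survive; if losses at successive stages are proportional, the surviving fractions multiply. The sketch below illustrates this under stated assumptions: the 15 ppm target at the point of consumption and the additional 20% loss during household storage and cooking are hypothetical values chosen for illustration, while the 57% supply-chain loss is the figure reported by the Ethiopian study.

```python
from math import prod


def surviving_fraction(losses):
    """Fraction of iodine remaining after successive proportional losses,
    e.g. [0.57, 0.20] means 57% lost at one stage, then 20% of the rest."""
    return prod(1.0 - loss for loss in losses)


def required_factory_level(target_ppm, losses):
    """Iodine concentration (ppm) needed at the factory so that `target_ppm`
    remains after all listed losses."""
    return target_ppm / surviving_fraction(losses)


# Hypothetical target at the point of consumption (illustrative value).
TARGET_PPM = 15
# 57% supply-chain loss (Ethiopian study) plus an assumed 20% household loss.
LOSSES = [0.57, 0.20]

level = required_factory_level(TARGET_PPM, LOSSES)
print(f"surviving fraction: {surviving_fraction(LOSSES):.3f}")
print(f"required factory level: {level:.1f} ppm")
```

With these illustrative inputs, only about a third of the factory iodine reaches the consumer, so the factory level would need to be roughly 2.9 times the target.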

Iron fortification

Iron-deficiency anaemia causes around 45 (31–65) million DALYs a year70. For comparison, malaria causes 83 (63–110) million DALYs71. Iron-deficiency anaemia also makes up a major part of years lived with disability (YLD) in developing countries (see Figure 8).

Figure 8: Overall “Years Lived with Disability (YLD)” in developing countries. Iron-deficiency anaemia is marked in black and makes up 6.76% of the total YLDs. Figure generated with the ‘Global Burden of Disease Compare tool’ (see http://ihmeuw.org/3a5g). © 2013 University of Washington - Institute for Health Metrics and Evaluation (Global burden of Disease data 2010, released 3/2013)

Many trials of iron supplementation and fortification have documented improvements in haemoglobin status (and thus improvements in anaemia). A recent systematic review analysed data from 60 trials and concluded that iron fortification of food resulted in a significant increase in haemoglobin (0.42 g/dl; 95% CI: 0.28, 0.56; P < 0.001) and serum ferritin, but had no effect on serum zinc concentrations, infections, physical growth or mental development7273.

Because iron is important for development, its effects on children have been studied separately. Specifically, four recent reviews and/or meta-analyses of RCTs have investigated the effects of iron supplementation and fortification in children.

A recent systematic review and meta-analysis of randomized controlled trials on the effects of iron-fortified foods on haemoglobin levels in children under 10 years of age showed that intake of iron-fortified foods was associated with higher haemoglobin concentration74. The authors concluded that iron-fortified foods could be effective in reducing iron-deficiency anaemia in children.

Another systematic review examined 37 RCTs of iron supplementation in primary-school-aged children and found evidence of an improved haemoglobin response75. These results were congruent with findings of similar studies in adult populations76. Another, more recent systematic review and meta-analysis of RCTs of daily iron supplementation in children in low- and middle-income settings similarly found that iron improved cognition, height, weight, iron deficiency and anaemia, and was well tolerated77. However, the evidence for effectiveness in younger children is less robust. A systematic review and meta-analysis of randomised controlled trials of daily iron supplementation in 4–23-month-old children found evidence of a reduction in anaemia, but concluded that benefits for the development of cognition, motor skills, height and weight were uncertain78.

Do the effects of supplementation generalize to cheaper population-level fortification of staple foods interventions?

One very recent study evaluated the impact of Costa Rica's fortification programme on anaemia in women and children79. Even though this was not a randomized controlled trial, the particulars of the data80 strongly hint at a causal relationship between fortification and reduced anaemia. The results suggest that fortification markedly improved iron status and substantially reduced anaemia.

Another very recent study81 used national-level surveys to compare micronutrient fortification practices and anaemia rates in non-pregnant women across countries. The authors suggest that, after controlling for confounders (such as level of development and endemic malaria), anaemia prevalence decreased significantly in countries that fortify flour with micronutrients, while remaining unchanged in countries that do not; each year of flour fortification reduces anaemia prevalence by 2.4%, i.e. 2.4% fewer women are anaemic82.
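A 2.4% annual reduction can be read in two ways: as a relative reduction (prevalence falls by 2.4% of its current value each year) or as an absolute reduction of 2.4 percentage points per year. The two readings diverge over time, as the sketch below shows for a hypothetical baseline prevalence of 40%, a value chosen only for illustration.

```python
def project_prevalence(initial: float, years: int, reduction: float, relative: bool) -> float:
    """Project anaemia prevalence (as a fraction) after `years` of fortification.

    relative=True:  prevalence falls by `reduction` times its current value each year.
    relative=False: prevalence falls by `reduction` (an absolute amount, i.e.
                    percentage points / 100) each year, floored at zero.
    """
    prevalence = initial
    for _ in range(years):
        if relative:
            prevalence *= 1.0 - reduction
        else:
            prevalence = max(0.0, prevalence - reduction)
    return prevalence


BASELINE = 0.40  # hypothetical baseline prevalence (40%)
after_absolute = project_prevalence(BASELINE, 10, 0.024, relative=False)
after_relative = project_prevalence(BASELINE, 10, 0.024, relative=True)

print(f"after 10 years, absolute reading: {after_absolute:.1%}")
print(f"after 10 years, relative reading: {after_relative:.1%}")
```

With these inputs, prevalence after a decade would be about 16% under the absolute reading but about 31% under the relative one.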

Two separate Cochrane systematic reviews, currently underway, summarize the evidence for potential benefits of flour fortification with iron against anaemia: one on maize flour fortification83 and one on wheat flour fortification84. PHC conducts maize flour fortification in countries such as Malawi and wheat flour fortification in countries such as Zimbabwe, so the results of these reviews will be directly relevant to its work.

Does iron fortification increase malaria?

It has been hypothesized that elevated iron status can increase malaria risk in areas where malaria is endemic. This possibility is concerning for some of PHC’s projects, such as the iron fortification programme in Malawi, where malaria is endemic8586. The hypothesis is biologically plausible, because the malaria parasite needs iron to function (indeed, it has been suggested that anemia might be an evolved response to fight malaria and other parasites)87. Studies of increased iron intake (via supplementation or fortification) in malaria-prone areas have yielded conflicting evidence on this question. We review findings from specific studies below, but whether increased iron intake increases malaria risk is a topic of ongoing research, and researchers call for greater study of the mechanisms underlying the negative effects of iron reported in some trials88.

One trial from Tanzania showed an increased risk of mortality among children after iron supplementation89. As a result, in 2006 the WHO changed its recommendations on iron supplementation for children in areas where malaria is endemic, from universal supplementation to targeted supplementation of iron-deficient children only90.

However, a 2011 Cochrane systematic review suggested that iron supplementation does not adversely affect children living in malaria-endemic areas91 and recommended that routine iron supplementation should not be withheld from children living in malaria-endemic countries. A 2013 systematic review and meta-analysis of randomised controlled trials on the effect of daily iron supplementation on health in children92 found no evidence that daily oral iron supplementation increased malaria, diarrhoea or respiratory infection, but did find evidence that iron increased fever (the authors note, however, that few studies were done in malaria-endemic areas or specifically reported malaria-related outcomes).

However, a 2012 study found that iron status predicts malaria risk in children93, and another suggests that iron deficiency might protect against severe malaria and death in children94.

Another recent trial from 2013 did not find that iron increased the incidence of malaria among children in a malaria-endemic setting in which insecticide-treated bed nets were provided and appropriate malaria treatment was available95.

The most recent systematic review that we could find96 suggests that, overall, weighing the positive and negative effects of iron, improving iron status reduces mortality risk similarly in children with malaria and in those without malaria97.

Given the conflicting evidence in the literature, we asked experts in this field whether iron fortification of wheat and maize flour in Malawi (as conducted by PHC) is on the whole harmful or beneficial. One expert said that the question is currently unanswerable, but that most experts in the field would tend to assume that fortification is safer than supplementation. The same expert noted, however, that this hypothesis is unproven and that research on the relative benefits and risks of fortification versus supplementation is ongoing. He concluded that, despite these uncertainties, he thinks it is fairly safe to assume that iron fortification is likely to be benign98. Another international expert suggested that the effects of iron fortification on malaria risk are not as well studied as those of iron supplementation, though an upcoming randomized controlled trial in Malawi should provide further evidence on the topic. This expert noted that the risks associated with iron probably depend not only on the iron status of the host, but also on the manner and dose in which the micronutrients are given99.

The WHO also suggests that conclusions about the risks of iron supplementation drawn from trials should not be extrapolated to fortification or other food-based approaches to delivering iron, in which the patterns of iron absorption and metabolism may be substantially different100.

We have also asked PHC about this issue, and they reported that they are aware of the potential risks in malaria-prone areas; they regularly consult with national and international consultants (e.g. from the WHO), closely monitor current research on this topic, and work with malaria prevention programmes on the ground.

In sum, placing more weight on expert opinion and on the most recent systematic review that we could find, we think that iron fortification is very likely to be beneficial, because it reduces overall mortality and morbidity. There is some probability that it increases malaria incidence or severity, but this effect is probably small.

Vitamin A

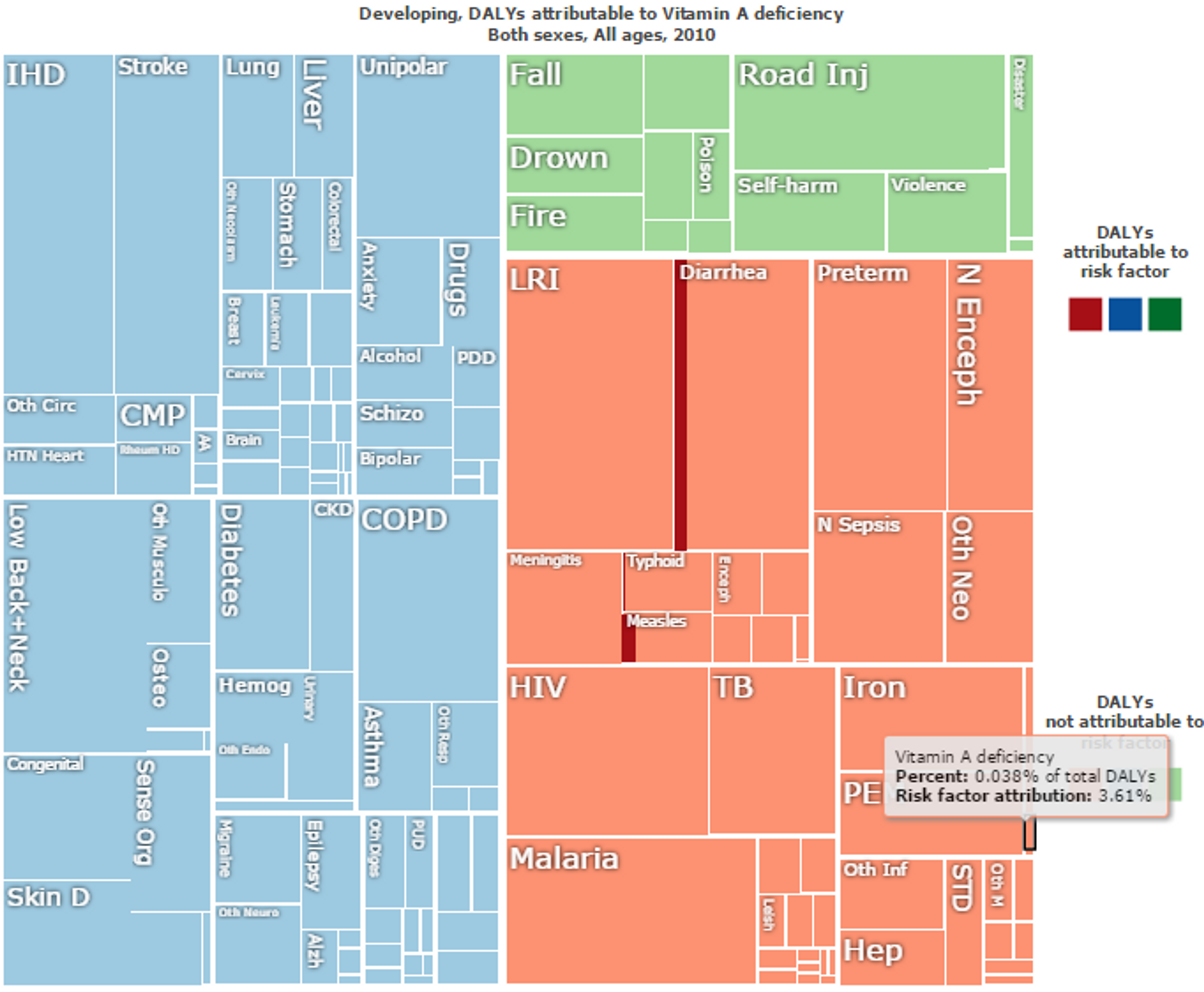

Vitamin A deficiency is the leading cause of preventable blindness in children and increases the risk of disease and death from severe infections101. In the recent Lancet series on maternal and child undernutrition, deficiencies of vitamin A were estimated to be responsible for 600,000 deaths per year and, together with zinc deficiency, a combined 9.8% of global childhood Disability-Adjusted Life Years (DALYs) (most deaths were due to diarrhea and measles: see Figure 9)102103. Given that PHC’s work in Burundi has led to the start of vegetable oil fortification with vitamin A104, below we review the evidence for the cost-effectiveness of vitamin A fortification programmes.

Vitamin A fortification has been shown to be very cost-effective. A recent study from Uganda estimated the cost per DALY averted for vitamin A fortification at only US$82 for sugar fortification and US$18 for oil fortification105. Another recent study suggested that even during prolonged deep frying, 45% of the vitamin A in fortified cooking oil remains intact, which is sufficient to meet daily requirements106. In line with this, a recent correlational study suggests that it is highly plausible that vitamin A-fortified oil increases vitamin A status in Indonesian women and children107.
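To make the Ugandan cost-per-DALY figures more concrete, the short sketch below converts them into DALYs averted for a fixed budget. This is only an illustration under stated assumptions. The US$10,000 budget is hypothetical, and the calculation assumes that cost per DALY averted stays constant as spending scales, which real programmes need not satisfy. The function name `dalys_averted` is likewise our own, chosen for illustration.

```python
# Cost per DALY averted (US$) for vitamin A fortification in Uganda,
# as reported in the study cited above.
COST_PER_DALY = {
    "sugar": 82.0,
    "oil": 18.0,
}


def dalys_averted(budget_usd: float, cost_per_daly: float) -> float:
    """DALYs averted for a budget, ASSUMING a constant (linear) cost per DALY."""
    return budget_usd / cost_per_daly


BUDGET = 10_000.0  # hypothetical budget in US$, chosen only for illustration

for vehicle, cost in COST_PER_DALY.items():
    averted = dalys_averted(BUDGET, cost)
    print(f"{vehicle}: about {averted:.0f} DALYs averted per US${BUDGET:,.0f}")
```

Under these assumptions, the same budget averts roughly 4.6 times as many DALYs through oil fortification as through sugar fortification (82 / 18 ≈ 4.6).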

GiveWell has extensively reviewed vitamin A supplementation interventions108. Among them is a large-scale trial109 that found no reduction in mortality. This trial has led some researchers to suggest that vitamin A policies should be revised, with more emphasis on vitamin A fortification as opposed to (biannual) vitamin A supplementation110. Other researchers have pointed to severe limitations of the large trial and suggest that abandoning vitamin A supplementation programmes would not be prudent111. Moreover, an accompanying meta-analysis112 suggested that taking all available evidence into account, including the large trial, simply yields a smaller estimate of mortality reduction (11% rather than the previously assumed 25%). The benefits of vitamin A fortification are well established in Western countries113. A Cochrane review examining the effects of fortifying staple foods with vitamin A to prevent vitamin A deficiency is currently being conducted114.

Figure 9: Overall Disability-Adjusted Life Years (DALYs) lost in developing countries. The direct impact of vitamin A deficiency is marked in black and makes up 0.038% of total DALYs. The contribution of vitamin A deficiency as a risk factor for other diseases is marked in red. For instance, vitamin A deficiency accounts for 10% of DALYs lost to diarrhea and 15% of DALYs lost to measles. Figure adapted from the Global Burden of Disease Compare tool (see http://ihmeuw.org/3ba4). © 2013 University of Washington, Institute for Health Metrics and Evaluation (Global Burden of Disease 2010 data, released 03/2013).

Vitamin A deficiency - disease interactions and risk factors

Vitamin A deficiency interacts with other conditions, increasing the risk of contracting other diseases. For instance, DNA damage from micronutrient deficiencies is likely to be a major cause of cancer115. A recent meta-analysis found that low doses of vitamins, particularly vitamin A, can significantly reduce the risk of gastric cancer116. (See also our cause report on cancer.)

Vitamin A deficiency is also associated with hepatitis C infection and with poor response to antiviral therapy117.

Research also suggests that vitamin A supplementation is beneficial for thyroid function and size, potentially more so when combined with iodized salt118.

Studies have also linked vitamin A deficiency to certain worm infections (e.g. the soil-transmitted helminth Ascaris lumbricoides). There is robust evidence for a relationship between high-intensity Ascaris infection and lower vitamin A levels119.

Zinc

Zinc deficiency is a significant public health problem in developing countries120. In the recent Lancet series on maternal and child undernutrition, zinc deficiency was estimated to be responsible for 500,000 deaths per year. Together with vitamin A deficiency, it was estimated to account for a combined 9.8% of global childhood Disability-Adjusted Life Years (DALYs)121.

Zinc supplementation for children is cost-effective. One recent study estimated the cost per DALY averted at US$606 for pill supplementation, US$1,211 for micronutrient biscuits, and US$879 for water filtration systems122.
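The three zinc delivery options are easier to compare when ranked by cost per DALY averted. The sketch below is a minimal illustration that assumes cost per DALY is the only criterion. It ignores coverage, feasibility and the uncertainty around each estimate. The helper name `rank_by_cost_effectiveness` is our own, chosen for illustration.

```python
# Cost per DALY averted (US$) for zinc delivery options, from the study cited above.
ZINC_OPTIONS = {
    "pill supplementation": 606.0,
    "micronutrient biscuits": 1211.0,
    "water filtration systems": 879.0,
}


def rank_by_cost_effectiveness(options: dict[str, float]) -> list[tuple[str, float]]:
    """Return (option, cost per DALY) pairs, cheapest per DALY averted first."""
    return sorted(options.items(), key=lambda item: item[1])


ranked = rank_by_cost_effectiveness(ZINC_OPTIONS)
cheapest_cost = ranked[0][1]
for name, cost in ranked:
    # Relative cost: how many times more each DALY costs than with the cheapest option.
    print(f"{name}: US${cost:,.0f} per DALY ({cost / cheapest_cost:.1f}x the cheapest)")
```

On these figures, pill supplementation comes first. Each DALY averted through micronutrient biscuits costs roughly twice as much.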

A recent trial from China123 showed that zinc-fortified flour increases zinc levels in rural Chinese women. A Cochrane review summarizing the evidence on fortification of staple foods with zinc for improving zinc status and other health outcomes in the general population is currently underway124. Finally, a recent trial showed that zinc supplementation might lead to a significant reduction in respiratory morbidity among children with acute lower respiratory infections in a zinc-poor population125.

Folic Acid

Neural tube defects affect an estimated 320,000 newborns worldwide annually126. A Cochrane systematic review concludes that folic acid supplementation, alone or in combination with other vitamins and minerals, prevents neural tube defects127. A recent trial from China128 also showed that folic acid-fortified flour increases folic acid levels in rural Chinese women.

In addition, a recent systematic review129 on the impact of folic acid fortification of flour on neural tube defects concludes that this intervention had a major impact in all countries where its effect has been reported. For instance, the authors report that folic acid fortification in Chile was followed by a 55% reduction in neural tube defect prevalence between 1999 and 2009. This is a fairly typical effect: countries that mandate folic acid fortification of wheat flour report an average reduction of 46% in NTD birth prevalence130. We found several studies not included in this review that show similar effects. After food fortification with folic acid is introduced, or folic acid intake is otherwise increased, folate levels rise and neural tube defects often decrease131132133134.

Neural tube defects place a significant economic burden on healthcare systems and on wider society135. Because preventing them avoids much of this cost, folic acid fortification has a very good benefit-cost ratio of 12–48:1, depending on the country136.

A recent meta-analysis and systematic review found no evidence that increased folic acid intake raises the risk of bowel cancer137. Another study found no increase in breast cancer or other cancers138.

Is biofortification more effective than industrial fortification?

Biofortification refers to the process of selectively breeding or genetically engineering crops to have higher micronutrient content. Even though this approach has not yet been practised at scale, it has been suggested that it could be more cost-effective than traditional industrial fortification of staple foods as conducted by PHC, and might reach a wider population139140. However, according to a recent paper by the Copenhagen Consensus Center141, the nutritional effectiveness of large-scale biofortification must first be verified before its cost-effectiveness can be established. In addition, farmers and consumers must accept the new plant varieties for biofortification to be a viable health intervention.

[^fn-1]: Lee, Sunmin et al. "Nutrient Inadequacy Is Prevalent in Pregnant Adolescents, and Prenatal Supplement Use May Not Fully Compensate for Dietary Deficiencies." ICAN: Infant, Child, & Adolescent Nutrition (2014): 1941406414525993.