Beneficial artificial intelligence

Artificial intelligence (AI) might be the most important technology we ever develop. Ensuring it is safe and used beneficially is one of the best ways we can safeguard the long-term future.

AI is already incredibly powerful: it’s used to decide who receives welfare, whether a loan is approved, or whether a job applicant receives an interview (which may even be conducted by an AI).

It’s also a research tool. In 2020, an AI system called AlphaFold made a “gargantuan leap” towards solving problems in protein folding, which scientists have been working on for decades.

Despite these impressive accomplishments, AIs don’t always do what we want them to. When OpenAI trained an agent to play CoastRunners, they rewarded it for increasing its points, expecting this to incentivise it to finish the race as fast as possible. Instead, the AI learned that it could achieve a higher score by repeatedly hitting the same target, never crossing the finish line.

Unfortunately, unintended consequences like these are not limited to amusing failures in outdated video games.[0] Amazon used an AI to screen resumés, thinking this would increase the fairness and efficiency of their hiring process. Instead, they discovered the AI was biased against women. It penalised resumés containing words like "women's" and "netball," while favouring language more frequently used by men, such as "executed" and "captured." This was not intended, but that may be of little comfort to the women whose applications were rejected because of their gender.

Ensuring AI is used to benefit everyone is already a challenge, and it's critical we get it right. As AI becomes more powerful, so does its scope for affecting our economy, politics, and culture. This has the potential to be either extremely good, or extremely bad. On the one hand, AI could help us make advances in science and technology that allow us to tackle the world's most important problems. On the other hand, powerful but out-of-control AI systems ("misaligned AI") could result in disaster for humanity. Given the stakes, working towards beneficial AI is a high-priority cause that we recommend supporting, especially if you care about safeguarding the long-term future.

Why is ensuring beneficial AI important?

We think ensuring beneficial AI is important for three reasons:

- AI is a technology that is likely to cause a transformative change in our society — and poses some risk of ending it.

- Relative to the enormous scale of this risk, not enough work is being done to ensure AI is developed safely and in the interests of everyone.

- There are things we can do today to make it more likely that AI is beneficial.

What is the potential scale of AI's impact?

In 2019, AI companies attracted roughly $40 billion USD in disclosed investments. This number has increased over the last decade, and is likely to continue growing.

Already the results of these investments are impressive: in 2014, AI was only able to generate blurry, colourless, generic faces. But within just a few years, its synthetic faces became indistinguishable from photographs, as you can see below.

Credit to Brundage et al. (2018).

This rate of progress seems astonishing, and it may lead you to think that, if the trend continues, AI will become even more impressive in the future. Yet we should be wary of using a measure as subjective as "impressiveness" to predict future AI capabilities, as reasonable people may disagree about what counts as impressive. There are also examples of AIs failing in quite trivial ways (such as the CoastRunners example above). But there are other reasons to think that AI will become increasingly powerful, and potentially transformatively powerful, this century.

Transformative AI

Transformative AI is defined as AI that changes our society by an amount comparable to, or greater than, the Industrial Revolution. This is no small feat: the Industrial Revolution[1] was a big deal. It massively increased our capacity to produce (and destroy) things we value. Its effects were so significant that you can see roughly when it occurred by looking at historical data of economic output and population.

The Industrial Revolution can be dated to James Watt's patent for the steam engine in 1769, which is about when the massive increase in our economic output and growth in population began. For AI to be that significant is a big claim — what could it look like?

One way AI could become transformative is if it becomes as intelligent as humans; this is often called "artificial general intelligence" (or "AGI"). This may seem very far away, but surveys show that most experts in AI believe that it is more likely than not to happen this century.[2]

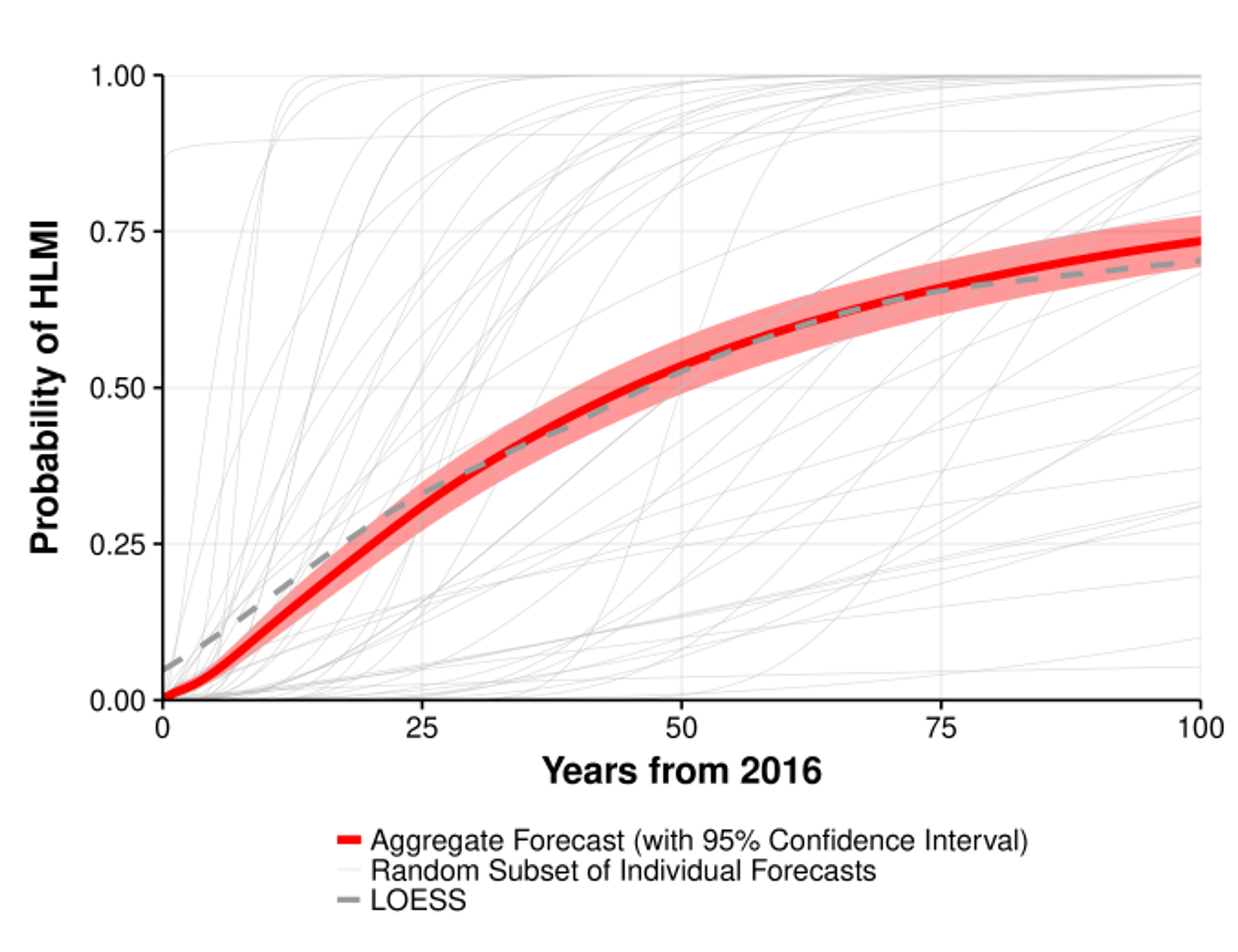

The graph below shows experts' probability estimates for when there will be machine intelligence that can accomplish every task better and more cheaply than human workers.3

From Grace et al., 2018

This would radically change the world we live in, resulting in explosive economic growth and an abundance of wealth, but with no guarantee that it will be shared equitably. AGI this powerful also has risks, because its interests may become misaligned with ours. The problem goes back to the CoastRunners example, where the agent spun a boat around in circles — we have not successfully worked out how to make sure AI behaves in ways we'd want and expect.

AI as an existential risk

According to Toby Ord, one of the leading experts on the risks facing humanity (and a co-founder of Giving What We Can), misaligned AI poses an existential risk.[4] An existential risk is something that could lead to the permanent destruction of human potential, and the most notable example of this kind of risk is extinction. Thinking that AI could pose an extinction risk might seem like something from a movie. But if you agree with experts that AI may become as smart as (or smarter than) humans this century, there are several reasons to be concerned.[5]

The first reason is quite straightforward: the last time one group became more intelligent than another, it did not go well for the less intelligent group. Human beings have dominated the planet, not because of our size or strength, but because of our intelligence. There are roughly 200,000 people for every chimpanzee, our closest living relative and one of the next-most intelligent species on Earth. Chimpanzees are currently endangered, and their survival depends on our choices, not theirs. Though it is an imperfect analogy, the concern is that we may at some point be in the same position as the chimpanzees with respect to powerful AI.

The next concern is that we don't yet know how to make AIs, even highly capable ones, behave as we want them to. One part of this is called the specification problem.[6] The idea is that it's difficult to precisely specify what we want an AI to do. Stuart Russell (a computer scientist) explains how this could devastate humanity with the following example.[7]

Suppose we have a highly intelligent AI, and we give it the goal of curing cancer. Diligently, the AI develops its medicine, and promises that it will kill the cancer cells within a matter of hours. We may be horrified to see that the AI was right — but it kills the patients too. The AI reasons that the simplest way of eliminating cancer is to kill the host.

This kind of scenario would be less likely to happen if we could simply investigate the AI's reasoning, asking it how it plans on achieving its goals. This is something AI safety researchers are working on, but they have not yet worked out how to do it in a robust manner.[8] Unless we make progress on this kind of research, we are likely to face significant, even existential, threats from misaligned AI. Therefore, the potential scale of AI's negative impact in these worst-case scenarios is extremely large.

Is promoting beneficial AI neglected?

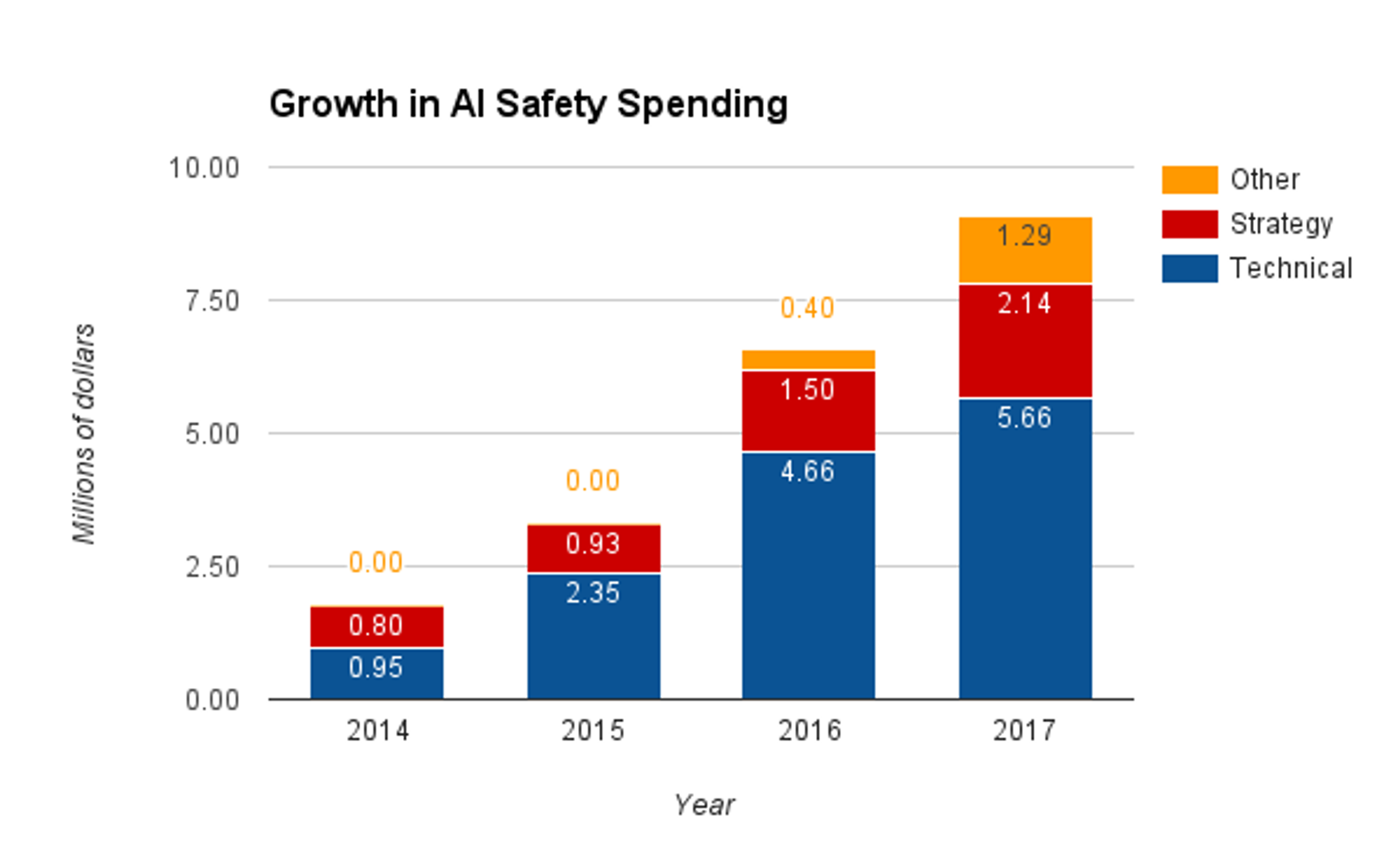

Historically, promoting beneficial AI was severely neglected. It has been estimated that in 2014, less than $2 million USD was spent on safety-specific AI research. However, by 2017, this had grown to nearly $10 million USD:

A more recent estimate suggests that in 2019, roughly $40 million USD was provided by donors and grantmakers to reduce potential risks from AI. This trend suggests that there is increasing interest in funding work that promotes beneficial AI.[9] As a result, compared to our other high-priority causes, there is a smaller gap between the amount of funding organisations can effectively spend and the total amount of funding available.[10]

But we think that there is still room for additional funding to make a difference. Even with the recent increase in funding, work on beneficial AI receives far less than other causes of comparable scale.[11] More importantly, as the field grows, we expect the amount AI safety organisations can effectively spend to increase. We therefore still consider promoting beneficial AI to be neglected.

Is promoting beneficial AI tractable?

There are different ways to promote beneficial AI (described in the next section). However, given how new this area is, it's too early to confidently say whether these problems are easy, or even possible, to solve. Consider that, as of 2021, the two organisations we recommend donating to are under five years old. Given the substantial scale of the problem, it’s worth trying to make progress, even if we aren’t sure how fruitful our efforts will be.

How can we promote beneficial AI?

There are two challenges we need to solve to ensure AI is beneficial for everyone:

- The technical challenge: how can we make sure powerful AI systems do what we want them to?

- The political challenge: how can we ensure the wealth created by AI is distributed fairly, and incentivise AI companies to build AI safely?

Technical challenge: Ensuring AI systems are safe

To ensure powerful AI systems benefit us in the future, there are concrete technical problems we need to solve.[12] Some of these have been discussed already: the CoastRunners example and the specification problem.

If you think these technical problems are a significant challenge that we could make progress on, we recommend donating to the Center for Human-Compatible Artificial Intelligence (CHAI). Their research agenda is to find a new model of AI in which the AI's objective is to satisfy human preferences. The hope is that by doing so, we can make progress on the specification problem described above. So far, CHAI has produced an extensive amount of published research, developed the field of AI safety by funding and training nearly 30 PhD students, and informed public policy. To read more about CHAI's successes so far, we recommend reading Founders Pledge's report.

Read more about the Center for Human-Compatible Artificial Intelligence.

Political challenge: Promoting beneficial AI governance

Whether we will be able to solve the technical problems discussed above depends, in part, on how successfully we govern AI’s development. If AI development only aims to make a profit, there is a risk we will see what Oxford Professor Allan Dafoe calls value erosion, where companies are incentivised to progress quickly, rather than safely. This is because they would capture a significant proportion of the rewards of powerful, safe AI systems — whereas if the AI is unsafe, they would only be one of many who pay the price.

Even if AI is safe in the technical sense (meaning it is performing as intended), we still need to make sure it is used to benefit everyone. There is some risk it would not be. Poorly governed but powerful AI systems could result in unprecedented wealth inequality, or lock in the (potentially undesirable) values of a handful of people, with no consultation from the public.[13]

These problems may seem abstract and mainly focused on hypothetical issues of AI systems with capabilities far beyond those that exist today. But as we saw with the gender bias in Amazon's algorithm, ensuring that today's AI is used beneficially is already a major challenge. If you think good governance around powerful AI is important, we recommend donating to the Centre for the Governance of AI (GovAI).

GovAI's aim is to provide foundational research that can be used to ensure highly advanced AI is developed safely and used to everyone's benefit. You can read their research agenda and annual report, and donate to them here. Already, their research has been published in top journals, referred to on major news outlets such as the BBC and The New York Times, and has informed policymakers in the US and in the European Union.[14]

Why might you not prioritise promoting beneficial AI?

In addition to reasons you might not prioritise safeguarding the long-term future in general, you may choose to prioritise other causes to improve humanity's long-term trajectory. Though we think there's a strong case for promoting beneficial AI, there has been a (perhaps exaggerated) history of false predictions about the topic.

One reason not to prioritise promoting beneficial AI is if you believe transformative AI is unlikely to occur this century. For example, you might not be convinced by the expert surveys cited earlier. Haven't these experts been wrong before? Why should we trust the people working in a field to have accurate views about how promising the field is? Though we think it is reasonable to take the views of experts into account when considering the future of a field, it also makes sense to be sceptical. While these surveys often show that AI experts think transformative AI is likely this century, they also reveal that experts have not put much thought into this prediction: some experts provided inconsistent responses to the same question when it was worded differently.

Open Philanthropy's worldview investigations team has been looking at what AI's capabilities are likely to be. Though their complete report is not yet published, their co-founder, Holden Karnofsky, has recently provided an overview of their findings. Karnofsky was originally sceptical about whether we should prioritise AI, and now the potential for transformative AI is one of the key reasons he believes we are living in the most important century. If doubt about AI's future capabilities is your main reason for being sceptical about prioritising AI, we recommend checking out his overview of whether we are trending towards transformative AI. While we find it compelling, we also think that other high-priority cause areas we recommend (such as global health and development) have more certain and tested solutions. Depending on your worldview, you may choose to prioritise these kinds of problems over AI.

What are some charities, organisations, and funds trying to promote beneficial AI?

You can donate to several promising programs working in this area via our donation platform. Below, we've listed some orgs and funds working on promoting beneficial AI:

- Center for Human-Compatible Artificial Intelligence

- Centre for the Governance of AI

- Long-Term Future Fund

- Emerging Challenges Fund

- Giving What We Can's Risks and Resilience Fund

For our charity and fund recommendations, see our best charities page.

How else can you help?

Through your career! We recommend checking out 80,000 Hours' job board to see the kind of openings available, or reaching out to them for career advice.

You can also remain vigilant by finding ways to learn more about the area, taking opportunities to help when you see them, and connecting with other people interested in the topic.

Learn more

To learn more about beneficial AI, check out the following resources:

- Safeguarding the future executive summary (Founders Pledge)

- Intro to AI safety, remastered (Robert Miles)

- The most important century (Holden Karnofsky)

- Helen Toner on how emerging technologies could affect national security (80,000 Hours)

- Brian Christian on the alignment problem (80,000 Hours)

- Why governing AI is our opportunity to improve the future (Jade Leung)

Our research

This page was written by Michael Townsend. You can read our research notes to learn more about the work that went into this page.

Your feedback

Please help us improve our work — let us know what you thought of this page and suggest improvements using our content feedback form.